This post is a follow-up to my earlier post promoting digital flashcards as an effective study strategy for learners of all ages. In that post, I suggested that educators are at times opposed to rote learning, assuming that strategies such as flashcards promote a shallow form of learning that limits understanding and transfer. While this might appear to be the case because flashcards seem to involve a simple activity, the cognitive mechanisms involved in trying to recall, and in reflecting on the success of those efforts, provide a wide variety of benefits.

The benefits of using flashcards in learning and memory can be explained through several cognitive mechanisms:

1. Active Recall: Flashcards engage the brain in active recall, which involves retrieving information from memory without cues (unless the questions are multiple-choice). This process strengthens the memory trace and increases the likelihood of recalling the information later. Active recall is now more frequently described as retrieval practice, and its benefits as the testing effect. Researchers have proposed explanations for why recall attempts, even unsuccessful ones, are associated not only with more successful recall in the future but also with broader benefits such as understanding and transfer. These explanations counter the concern that improving memory is necessarily a focus on rote learning. More on this later.

2. Spaced Repetition: When used systematically, flashcards can facilitate spaced repetition, a technique where information is reviewed at increasing intervals. This strengthens memory retention by exploiting the psychological spacing effect, which suggests that information is more easily recalled if learning sessions are spaced out over time rather than crammed in a short period.

3. Metacognition: Flashcards help learners assess their understanding and identify knowledge gaps. Learners often have a flawed sense of what they understand. As learners test themselves with flashcards, they become more aware of what they know and what they need to focus on, leading to better self-regulation in learning.

4. Interleaving: Flashcards can be used to mix different topics or types of problems in a single study session (interleaving), as opposed to studying one type of problem at a time (blocking). Interleaving has been shown to improve discrimination between concepts and enhance problem-solving skills.

5. Generative Processing: External activities that encourage helpful cognitive behaviors are one way of describing generative learning. Both responding to questions and creating questions have been studied extensively and have been shown to produce achievement benefits.
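The scheduling side of items 2 and 4 can be made concrete with a short Python sketch. It is only an illustration under stated assumptions: review gaps simply double (real flashcard tools use their own, often performance-based, rules), and interleaving is shown as a round-robin over topic decks. The function names are hypothetical.

```python
from itertools import zip_longest

_GAP = object()  # sentinel used to pad shorter decks during interleaving


def review_days(first_interval=1, factor=2, reviews=5):
    """Days (counted from the first study session) on which each review falls.

    Assumption: every gap is `factor` times the previous one; real tools
    usually adjust gaps according to how well each card was recalled.
    """
    days, day, gap = [], 0, first_interval
    for _ in range(reviews):
        day += gap
        days.append(day)
        gap *= factor
    return days


def blocked(decks):
    """Blocking: finish every card of one topic before starting the next."""
    return [card for deck in decks for card in deck]


def interleaved(decks):
    """Interleaving: take one card from each topic in turn (round-robin)."""
    order = []
    for group in zip_longest(*decks, fillvalue=_GAP):
        order.extend(card for card in group if card is not _GAP)
    return order


if __name__ == "__main__":
    mitosis = ["prophase", "metaphase", "anaphase"]
    meiosis = ["crossing over", "reduction division"]
    print(review_days())  # [1, 3, 7, 15, 31]
    print(blocked([mitosis, meiosis]))
    print(interleaved([mitosis, meiosis]))
    # ['prophase', 'crossing over', 'metaphase', 'reduction division', 'anaphase']
```

The same cards appear in both study orders; only the sequence changes. In the interleaved order, consecutive cards come from different topics, which is where the discrimination benefit described in item 4 is thought to come from.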

Several of these techniques may contribute to the same cognitive advantage. These methods (interleaving, spaced repetition, recall rather than recognition) increase the demands of memory retrieval, and greater demands force a learner to move beyond rote. Learners must search for the ideas they want, and an effortful search activates related information that may provide a link to what they are looking for. An increasing number of possibly related ideas become available within the same time frame, allowing new connections to be made. Those connections can be thought of as understanding and, in some cases, creativity.

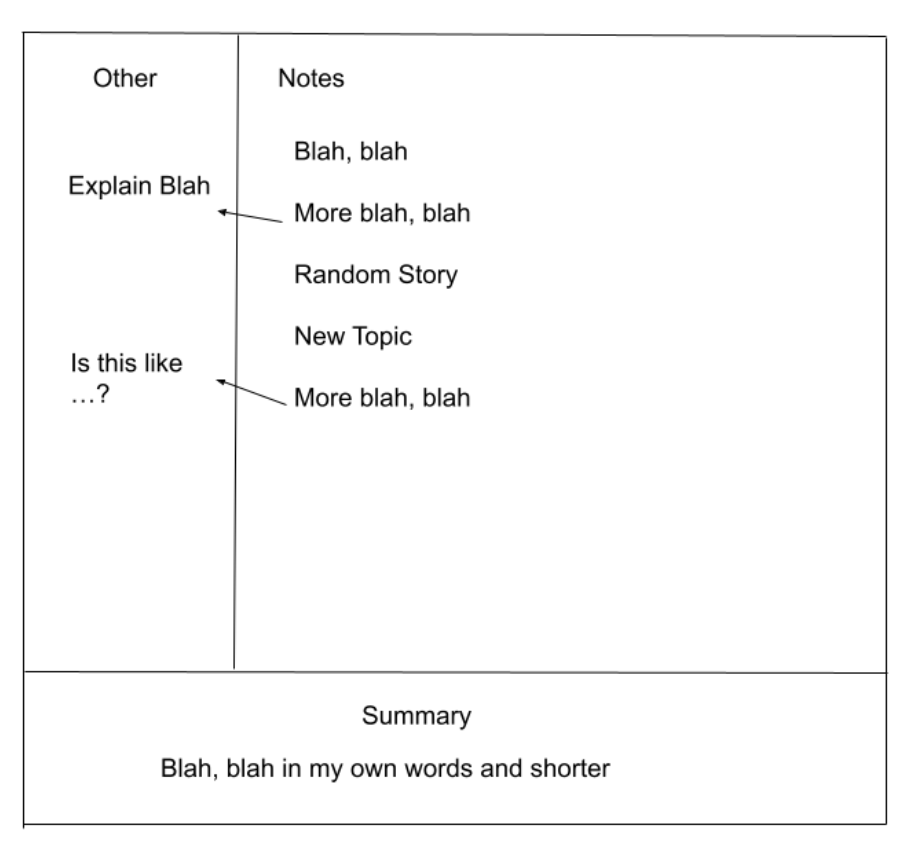

This idea of the contribution of challenge to learning can be identified in several different theoretical perspectives. For example, Vygotsky proposed the concept of a Zone of Proximal Development, which positions ideal instruction as challenging learners a bit above their present level of functioning, but within the level a learner could take on with a reasonable chance of understanding. A more recent but similar concept, desirable difficulty, came to my attention as the explanation given for why taking notes on paper was superior to taking notes using a keyboard. The proposal was that keyboarding is too efficient; learners who record notes by hand are forced to think more carefully about what they want to store. Deeper thought was required when the task was more challenging.

Finally, I have been exploring the work of researchers studying the biological mechanisms responsible for learning. As someone with practical limits on my time, I don’t spend a lot of time reviewing the work done in this area. I understand that memory is a biological phenomenon and that cognitive psychologists do not focus on this more fundamental level, but I have also yet to find insights from biological research that required me to think differently about how memory happens. Anyway, a recent book (Ranganath, 2024) proposes something called error-driven learning. The author eventually backs away a bit from this phrase, suggesting that it does not require you to make a mistake but happens whenever you struggle to recall.

Ranganath proposes that the hippocampus enables us to “index” memories for different events according to when and where they happened, not according to what happened. The hippocampus generates episodic memories by associating a memory with a specific place and time. Changes in context over time matter because memories stored in this fashion become more difficult to retrieve as the context changes. Activating memories through spaced practice makes retrieval more effortful and more error-prone, but a successful retrieval adds a connection to a different context. So spacing potentially offers two things. It offers different context links, because different information tends to be active at different places and times (that is, information other than what is being studied would also be active). It also involves retrieval practice, because greater difficulty requires more active processing and exploration of additional associations. I am adding concepts such as spacing and retrieval practice from my cognitive perspective, but I think these concepts fit very well with Ranganath’s description of “struggling”.

I have used the term episodic memory in a somewhat different way. However, the way Ranganath describes changing contexts over time seems useful as an explanation for what has long been appreciated as the benefit of spaced repetition for the development of long-term retention and understanding.

When I covered memory in my educational psychology courses, I described the difference between episodic and declarative memories as similar to the difference between students’ memory for a story and their memory for facts or concepts. I proposed that studying, especially trying to convert the language and examples of the input (what they read or heard in class) into their own way of understanding with personal examples that were not part of the original content, was something like converting episodic representations (stories) into declarative representations linked to relevant personal episodic elements (students’ own stories). This is not an exact account of human cognition in several respects. For example, even our stories are not exact; they are biased by past and future experiences and can change with retelling. However, the comparison is useful as a way to develop what might be described as understanding.

So, to summarize, memory tasks, even seemingly simple ones such as basic factual flashcards, can introduce a variety of factors conducive to a wide range of cognitive outcomes. The assumption that flashcards are useful only for rote memory is flawed.

Flashcard Research

There is considerably more research on the impact of flashcards than I realized, including some recent studies that are specific to digital flashcards.

Self-constructed or provided flashcards – When I was still teaching, the college students I saw using flashcards were obviously using paper flashcards they had created. My previous post focused on flashcard tools for digital devices. As part of that post, I referenced sources for flashcards prepared by textbook companies and topical sets prepared by other educators and offered for use. I was reading a study comparing premade and learner-created flashcards (description to follow) and learned that college students are now more likely to use flashcards created by others. I guess this makes some sense considering how easy digital flashcard collections are to share. The question, then, is whether questions you create yourself are better than a collection that covers the material you are expected to learn.

Pan and colleagues (2023) asked this question and sought to answer it in several studies with college students. One of the issues they raised was the time required to create flashcards. They controlled the time available in the treatment conditions, so some participants had to create flashcards within the fixed amount of time allocated for study. Note that this focus on time is similar to retrieval practice studies in which some participants used part of the study phase to respond to test items while others were allowed to study as they liked. The researchers also conducted studies in which the flashcard group created flashcards in different ways: transcription (typing the exact content from the study material), summarization, and copying and pasting. The situation investigated here seems similar to note-taking studies comparing learner-generated notes with expert notes (quality notes provided to learners). In both types of research, one might imagine a generative benefit from learners creating the study material as well as a completeness or quality issue. The researchers did not frame their research in this way, but these would be alternative factors that might matter.

The researchers concluded that self-generated flashcards were superior. They also found that copy-and-paste flashcards were effective, which surprised me; I wonder whether the short time allowed may have been a factor. At the least, one can imagine using copy and paste as a quick way to create flashcards with the tool I described in my previous flashcard post.

Three-answer technique – Senzaki and colleagues (2017) evaluated a flashcard technique focused on expanding the types of associations used in flashcards. They based their types of flashcard associations on the types of questions they argued college students in information-intensive courses are asked to answer on exams. The first type is a verbatim definition, matching retention questions; the second is an accurate paraphrase, matching comprehension questions; and the third is a realistic example, matching application questions. Their research also compared teaching students how to use the three response types with simply asking them to include the three response types.

Whether students who use a study technique (e.g., Cornell notes, highlighting) are ever taught how to use it, and why it might be important to apply it in a specific way, is something I have always thought was important.

The Senzaki and colleagues research found their templated flashcard approach to be beneficial, and I could not help noticing that the Flashcard Deluxe tool I described in my first flashcard post was designed to allow three possible “back sides” for a digital flashcard. This tool would be a great way to implement this approach.
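One way to picture a three-answer card is as a single front with three labeled backs. The Python sketch below is hypothetical: the class, its field names, and the tab-separated export are my own assumptions for illustration, not materials from the Senzaki and colleagues study and not Flashcard Deluxe’s actual import format (check the tool’s documentation for that). The `missing_parts` check reflects the idea of asking learners to include all three response types.

```python
from dataclasses import dataclass


@dataclass
class ThreeAnswerCard:
    """One prompt with three kinds of answers (field names are hypothetical)."""

    front: str
    definition: str  # verbatim definition -> retention questions
    paraphrase: str  # accurate paraphrase -> comprehension questions
    example: str     # realistic example -> application questions

    def backs(self):
        """The three back sides, in a fixed order."""
        return [self.definition, self.paraphrase, self.example]

    def missing_parts(self):
        """Names of any answer types the learner has left blank."""
        labels = ("definition", "paraphrase", "example")
        return [label for label, text in zip(labels, self.backs()) if not text.strip()]

    def to_tsv_row(self):
        """One tab-separated line: front, then the three backs (assumed format)."""
        fields = [self.front, *self.backs()]
        # Tabs or line breaks inside a field would split the row, so flatten them.
        return "\t".join(f.replace("\t", " ").replace("\n", " ") for f in fields)


card = ThreeAnswerCard(
    front="Spacing effect",
    definition="Information is more easily recalled if learning sessions are "
    "spaced out over time rather than crammed in a short period.",
    paraphrase="Spreading study out works better than cramming.",
    example="",
)
print(card.missing_parts())  # ['example']
```

Here the learner would be prompted to add a realistic example before the card counts as complete.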

AI and Flashcards

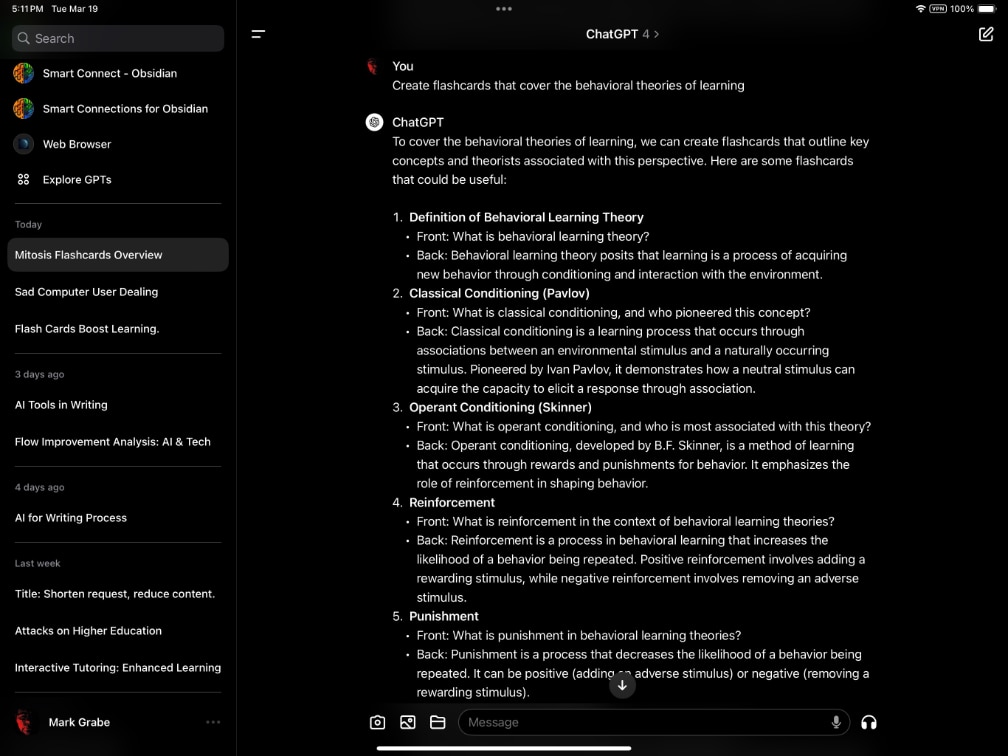

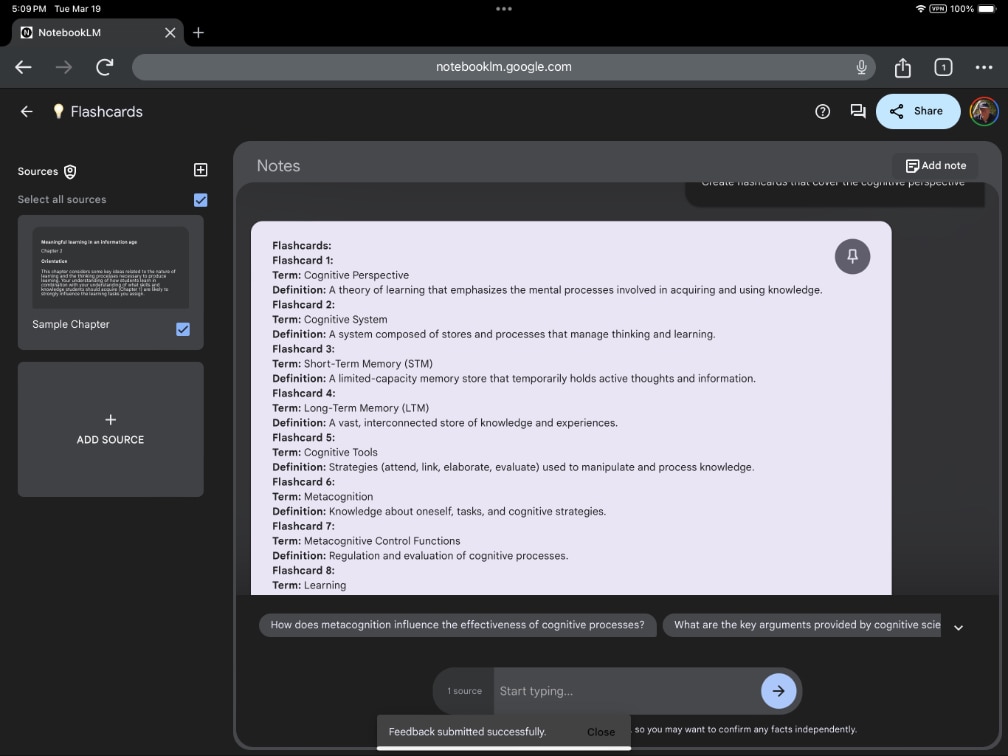

So, while learner-generated flashcards offer an advantage, I started to wonder about AI and was not surprised to find that AI-generated capabilities are already touted by companies providing flashcard tools. This led me to wonder what would happen if I asked AI tools I use (ChatGPT and NotebookLM) to generate flashcards. One difference I was interested in was asking ChatGPT to create flashcards over topics and NotebookLM to generate flashcards focused on a source I provided. I got both approaches to work. Both systems would generate front and back card text I could easily transfer to a flashcard tool. I found that some of the content I decided would not be particularly useful, but there were plenty of front/back examples I thought would be useful.

The following image shows a ChatGPT response to a request to generate flashcards about mitosis.

This use of AI used NotebookLM to generate flashcards based on a chapter I asked it to use as a source.

This type of output could also be used to augment learner-generated cards, or to generate individual cards a learner might extend using the Senzaki and colleagues design.
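Because both tools returned front and back text, moving their output into a flashcard tool can be partly automated. The sketch below is hypothetical and assumes I had asked the AI tool to write each card as a plain “Front:” line followed by a “Back:” line; actual responses vary, and markdown formatting such as bold labels would need extra handling. It converts that text into tab-separated lines, a plain format that many flashcard tools accept for import, though you should check your own tool’s import options first. The function names are my own.

```python
import re

# Assumed input layout (requested explicitly from the AI tool):
#   Front: <question>
#   Back: <answer>
CARD_LINE = re.compile(r"^\s*(Front|Back)\s*:\s*(.*)$", re.IGNORECASE)


def parse_cards(text):
    """Pair each 'Front:' line with the next 'Back:' line; ignore everything else."""
    cards, front = [], None
    for line in text.splitlines():
        match = CARD_LINE.match(line)
        if not match:
            continue  # skip headings, blank lines, and commentary
        label, content = match.group(1).lower(), match.group(2).strip()
        if label == "front":
            front = content
        elif front is not None:  # a 'Back:' line with a pending front
            cards.append((front, content))
            front = None
    return cards


def to_tsv(cards):
    """One card per line: front, a tab, then back (tabs inside text become spaces)."""
    return "\n".join(
        front.replace("\t", " ") + "\t" + back.replace("\t", " ")
        for front, back in cards
    )


sample = """Here are some flashcards on mitosis:
Front: What is mitosis?
Back: Division of one nucleus into two genetically identical nuclei.
Front: Which phase follows metaphase?
Back: Anaphase
"""
print(to_tsv(parse_cards(sample)))
```

Reviewing the resulting list before importing is still where the judgment happens; as I noted, some of the AI-generated cards were not worth keeping.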

References

Pan, S. C., Zung, I., Imundo, M. N., Zhang, X., & Qiu, Y. (2023). User-generated digital flashcards yield better learning than premade flashcards. _Journal of Applied Research in Memory and Cognition, 12_(4), 574–588. https://doi.org/10.1037/mac0000083

Ranganath, C. (2024). _Why We Remember: Unlocking Memory’s Power to Hold on to What Matters_. Doubleday Canada.

Senzaki, S., Hackathorn, J., Appleby, D. C., & Gurung, R. A. (2017). Reinventing flashcards to increase student learning. _Psychology Learning & Teaching, 16_(3), 353–368.
