The period since the end of the COVID lockdown has been difficult for K-12 schools. Students have struggled to attain traditional rates of academic progress. Student absenteeism has reached alarming levels. Where we live, some districts have struggled to find teachers to fill vacant positions, and other districts are now cutting staff because of massive budget overruns. Educators feel a lack of support from politicians and from groups of parents who complain about various issues and sometimes pull their children out of one school to enroll them in another.

I certainly have no answer to this collection of challenges, as it seems that any proposed solution to one problem only leads to complaints about its consequences for a different issue. I look to educational research for potential solutions, and there are certainly strategies with proven positive effects in specific areas, but that seems to be as far as it is practical to go.

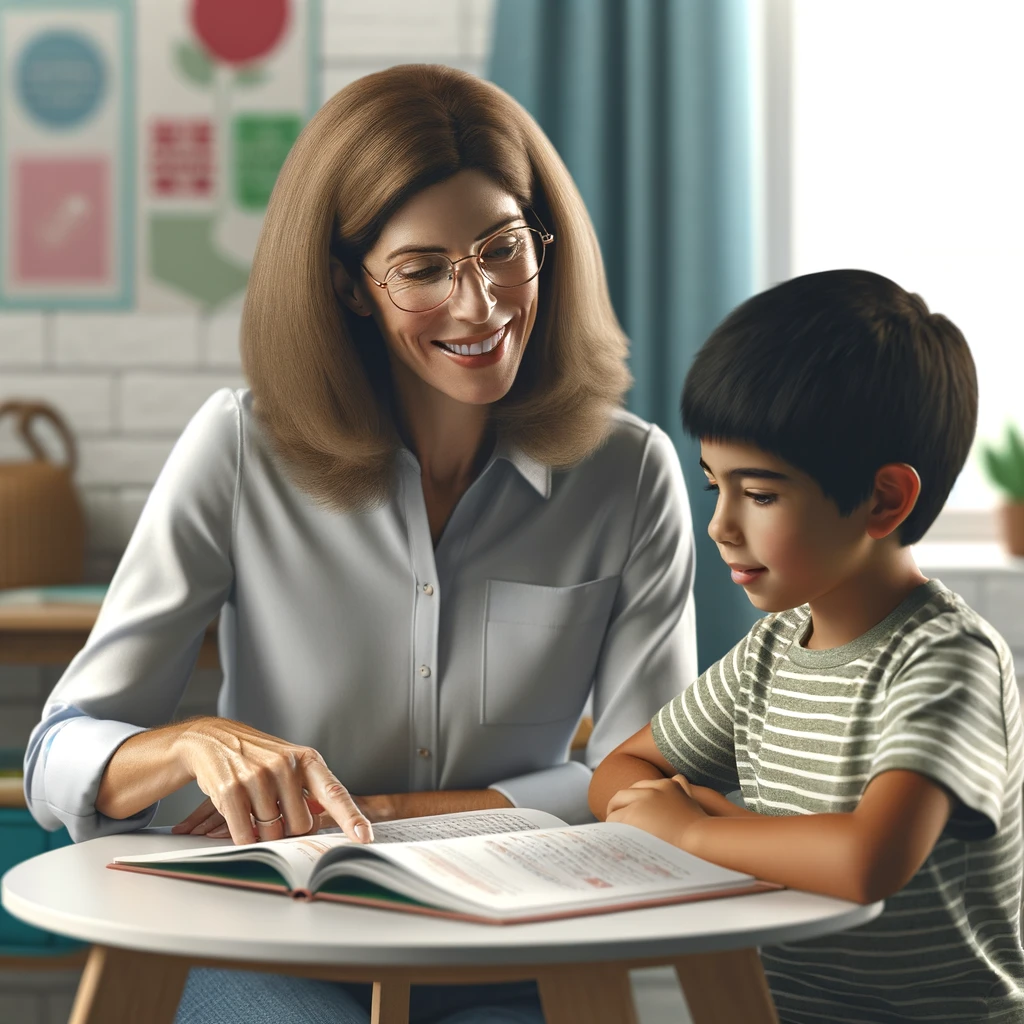

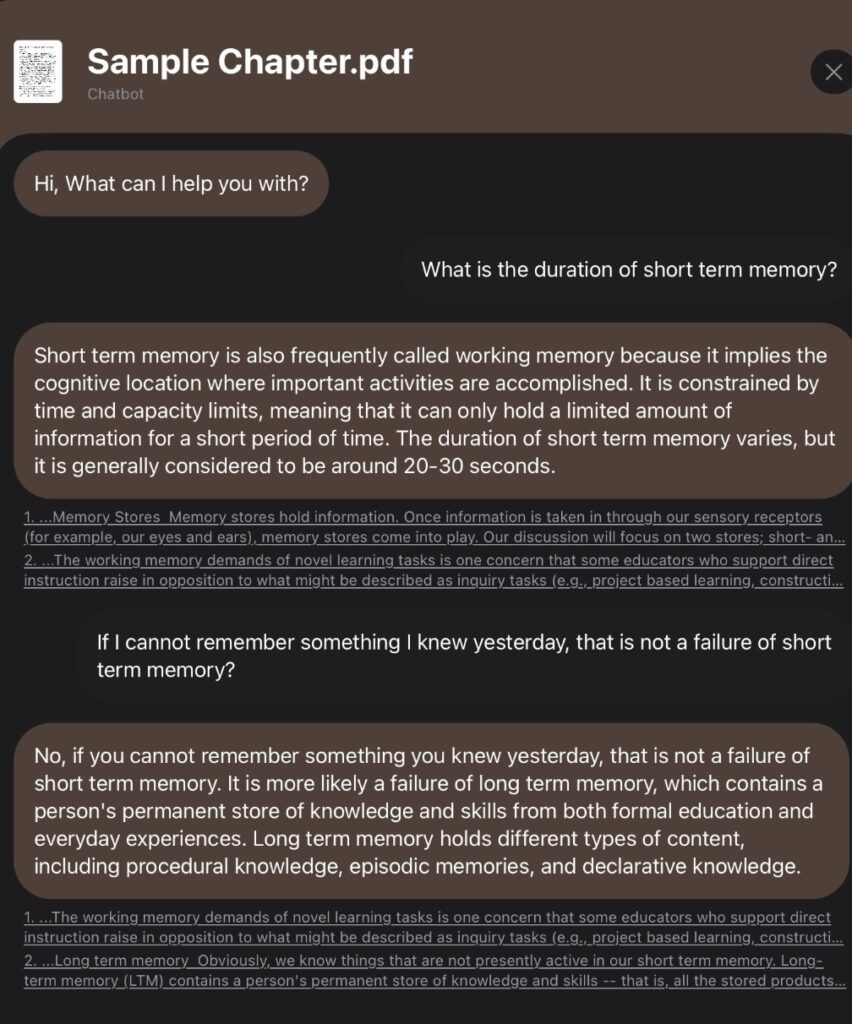

Tutoring is one of the areas I have been investigating recently. My specific interest has been in the potential of AI as a substitute for human tutors, but the ample research on the effectiveness of human tutors has also been fruitful ground for considering which proven experiences and roles AI might approximate. I have continued to review what we know about the effectiveness of human tutors and what tutors provide that students do not receive from their traditional classroom experiences.

For those wanting to review this research themselves, I recommend a fairly recent working paper by Nickow and colleagues (2020). This paper is both a general descriptive review of how tutoring is practiced and a meta-analysis of 96 high-quality studies of the effectiveness of tutoring as an addition to K-12 instruction. The meta-analysis considers only the impact of adult tutors, but the descriptive review does include some comments on both peer tutoring and technology-based tutoring. The technology comments were written before recent advances in AI. My summary of this paper follows.

Key Findings

I will begin with the overall conclusion. While some implementations and situations are more effective than others, adult tutoring consistently results in positive outcomes. The authors concluded that tutoring produced substantial and consistent positive effects on learning outcomes, with an overall effect size of 0.37 standard deviations. Results are strongest for teacher-led and paraprofessional tutoring programs, with diminished but still positive effects for nonprofessional and parent-led tutoring. Notably, tutoring is most effective in the earlier grades and shows comparable impacts across reading and mathematics interventions, although reading tends to benefit more in earlier grades and math in later ones. A large proportion of the studies, and of tutoring activity generally, appears to focus on the first couple of years of school and on reading. Far more studies examine tutoring in the early grades than in secondary school; only 7% of the studies included students above 6th grade.
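To give a feel for what an effect size of 0.37 standard deviations means, one common translation converts it into a percentile. If test scores are roughly normally distributed with the same spread in both groups (a simplifying assumption, not something the paper asserts), the average tutored student would score at the percentile of the untutored group given by the standard normal CDF of the effect size. This short sketch is my own illustration rather than a calculation from the paper, and the function name is hypothetical.

```python
import math


def percentile_of_average_treated(effect_size: float) -> float:
    """Percentile of the untutored (control) distribution at which the
    average tutored student would fall.

    Assumes normally distributed scores with equal spread in both groups;
    this is a simplifying assumption used only for illustration.
    """
    # Standard normal CDF via the error function: Phi(x) = (1 + erf(x / sqrt(2))) / 2
    phi = 0.5 * (1.0 + math.erf(effect_size / math.sqrt(2.0)))
    return 100.0 * phi


# Overall effect size reported by Nickow et al. (2020)
print(round(percentile_of_average_treated(0.37)))
```

Under those assumptions, this prints 64: the average tutored student moves from the 50th percentile to roughly the 64th percentile of comparable students who were not tutored.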

Categories of Tutors

The study by Nickow, Oreopoulos, and Quan (2020) identifies four categories of tutors:

- Teachers: These are certified professionals who have undergone formal training in education. They are typically employed by schools and likely have a deeper understanding of the curriculum, teaching strategies, and student assessment.

- Paraprofessionals: These individuals are not certified teachers, but they often work in educational settings. Paraprofessionals may include teaching assistants, educational aides, or other support staff in schools. They often work under the supervision of certified teachers.

- Nonprofessionals: This category typically includes volunteers who may not have formal training in education. They could be community members, older students, or others who offer tutoring services. Their level of expertise can vary widely.

- Parents: Parents often play a tutoring role, especially in the early years of a child’s education. Parental involvement in a child’s education can have a significant impact, but the level of expertise and the ability to provide effective tutoring can vary greatly among parents.

The study found that paraprofessionals are the largest providers of tutoring services, followed by nonprofessionals. Tutoring was more effective when provided by teachers and paraprofessionals than when provided by nonprofessionals and parents. Tutors with teaching certificates and classroom experience were rare in the research studies, but they were the most effective. As mentioned in the introduction to this post, how schools must allocate their budgets appears to strongly influence whether these known sources of impact can actually be put to use.

Mechanisms of Tutoring Effectiveness

The paper also reviews mechanisms that may be responsible for the effectiveness of tutoring. While these hypothesized mechanisms were not evaluated in the meta-analysis, I find such lists useful in considering other approaches such as AI. Which of these mechanisms could AI tutoring address, and which would not be possible?

The paper discusses several mechanisms through which tutoring enhances student learning:

- Increased Instruction Time: Tutoring provides additional instructional time, which is crucial for students who have fallen behind.

- Customization of Learning: The one-on-one or small-group settings of tutoring allow for tailored instruction that addresses the individual needs of students, a significant advantage over standard classroom settings, where diverse learning needs can be challenging to meet. The authors propose that tutoring might be thought of as an extreme manipulation of class size. I know that studies of class size have produced inconsistent results, but extremely small class sizes are not investigated in such research.

- Enhanced Engagement and Feedback: Tutoring facilitates a more interactive learning environment in which students receive immediate feedback, improving learning efficiency and allowing misunderstandings to be corrected in real time.

- Relationship Building: The mentorship that develops between tutors and students can motivate students, fostering a positive attitude toward learning and educational engagement.

Program Characteristics

The paper examines how the effectiveness of tutoring programs varies with several characteristics:

- Tutor Type: Teacher and paraprofessional tutors are found to be more effective than nonprofessionals and parents, suggesting that the training and expertise of the tutor play a critical role in the success of tutoring interventions.

- Intervention Context: Tutoring programs integrated within the school day are generally more effective than those conducted after school, possibly due to higher levels of student engagement and less fatigue.

- Grade Level: Effects tend to shrink as students get older, with the most substantial effects seen in the earliest grades. This finding emphasizes the importance of early intervention.

Summary

The message of this paper might be summarized as: schools cannot go wrong with tutoring. The paper suggests several policy implications based on its findings. Given the strong evidence supporting the effectiveness of tutoring, especially when conducted by trained professionals, the authors advocate for increased investment in such programs. They suggest that education policymakers and practitioners should consider tutoring a key strategy for improving student outcomes, particularly in the early grades and in subjects like reading and mathematics.

Reference

Nickow, A., Oreopoulos, P., & Quan, V. (2020). The impressive effects of tutoring on PreK-12 learning: A systematic review and meta-analysis of the experimental evidence (NBER Working Paper No. 27476). National Bureau of Economic Research. https://www.nber.org/papers/w27476
