Many of my posts describe tools and methods for externalizing and encouraging learning by recording some form of written notes. Recent versions of the approaches and tools have emphasized digital tools and concepts such as Smart Notes and a Second Brain. Note-taking has a long history with a large volume of research focused mainly on the use of notes in academic settings. This setting and this research were a major focus of my professional career. Now, with more time, I have broadened my focus beyond the classroom and the format of notes typically recorded in that setting. Other formats have unique practitioners and approaches that often exist in silos, and it seems possible that greater awareness of these different traditions would offer new opportunities.

I am not going to review past posts here that have emphasized taking digital notes, but I propose that such approaches can be compared with two other categories: commonplace books and readers’ notebooks (used here as a formal term).

Commonplace books have been around for centuries, and the commonplace books kept by famous creative people are sometimes explored for their historical significance (e.g., Da Vinci). Commonplace books are often divided by topic and thus differ from a diary, which is organized sequentially by date. They were often collections of quotes copied from books and organized into topics.

A reader’s notebook is a tool used by readers to track their reading, reflect on texts, and engage more deeply with literature. It is often used in classrooms, book clubs, or personal reading routines. While the specific components of a reader’s notebook can vary depending on its purpose, here are the most common components.

- A reading log: books that have been read (title, author, date read) and books to be read. What was the personal rating of the book?

- Book summaries and notes: Important quotes, key ideas, themes

- Reflections and responses: reactions and potential applications. Would the book be recommended?

- Characters and plot: Appropriate for works of fiction.

- Vocabulary: unfamiliar words encountered while reading, with definitions

- Questions and predictions: Questions related to the text. What is the author trying to say? How do I think this will end? Am I interpreting this correctly?

- Connections: Text-to-self. Text-to-other texts. Text-to-life or world experiences

- Visuals: charts, diagrams, and drawings, either copied or created.

- Related books: other books by the author or other relevant works. Author bio.

- Discussion notes: class or book club notes from discussions.

- Production goals: are there projects that might follow from the content of the book?

These components could be headings entered in a blank notebook (paper or digital) or could be scaffolded in some way. One common technique in K12 classrooms that use Google Classroom is to create and share a Google Slides file with slides prepared as templates for different assigned components. The user (student) can then duplicate slides from the templates as needed to create their Notebook. A cottage industry has sprung up among educators preparing and selling collections of templates on outlets such as “Teachers Pay Teachers”.

For those interested, here is a tutorial outlining how to set up Readers’ Notebooks, a great example of the type of template collection one could find and purchase, and, just so you don’t get the impression this learning tool applies only in K12, a higher ed example.

Readers’ Notebooks and Commonplace Books are both tools for recording and organizing thoughts, ideas, and information, often related to reading or personal reflection. While they share some similarities, they also have distinct purposes and methods of use. Here’s a breakdown of what I think are frequent differences in practice.

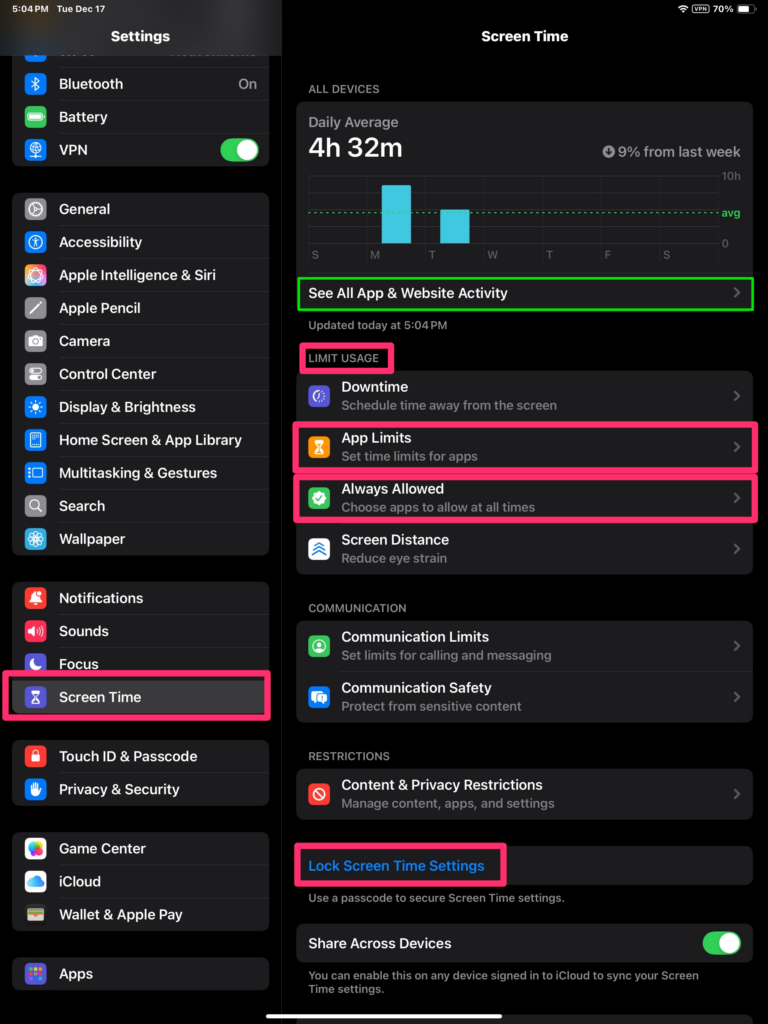

Basic Comparison of Reader’s Notebook and Commonplace Book

| | Reader’s Notebook | Commonplace Book |
| --- | --- | --- |
| Primary purpose | Engage with and process textbooks and personally selected books | Process and store content from a variety of sources |
| Learning goals | Primary use is typically focused on assigned texts and improvement of reading skill | Used to collect and synthesize knowledge across interests |
| Typical context | Most commonly used in educational settings or book clubs | Used by scholars, writers, and thinkers to compile knowledge |
| Format | Can be freeform, but commonly structured using templates in educational settings | Commonly freeform |
| Intended duration | In academic settings, duration is the length of a course, or longer when used to track reading interests and performance through multiple grades | Intended for long-term accumulation of potentially useful information and thoughts |

The Continuum

I wonder if Readers’ Notebooks, Commonplace Books, and tools for building long-term note-taking systems might be positioned along a continuum, with different goals emphasized at each transition but all based on the desire to document learning experiences. The transitions might involve changes in the level of formal structure, the unit of information and the means of connecting units, expectations about modifying source units over time, the likelihood that content will be shared directly with others, and the degree to which the approach is intended to feed into external products versus documenting and examining personal experiences.

One of my personal interests has always been whether learners are taught and coached on their efforts to externalize learning experiences. As a college prof interested in the hows and whys of taking notes, I observed that so many students just kind of wrote stuff down without previous formal discussions concerning specific tactics and explanations of why specific tactics were being promoted. I wonder if the template-oriented approach of the Reader’s Notebook with the common practice of sharing with classmates and the teacher might represent a way to develop insights and skills related to taking notes.
