The Concept of Disruption Applied to Education

I think of Clayton Christensen as a theorist and writer focused mainly on business, and in particular on the challenges of change. Two of his core ideas have stuck with me. The first is the innovator’s dilemma, which describes the difficulty of moving on from a successful approach when circumstances change. Why does success at one point limit future success? The second concerns the opportunities and risks that arise when a field encounters great disruption. These two big ideas are interrelated, because great disruptions can create circumstances that challenge an existing successful approach.

Big ideas are not necessarily specific to a given field (e.g., business), and Christensen made the effort to apply his theories to education. The result was his 2008 book Disrupting Class, written with Michael Horn and Curtis Johnson. For those interested in K12 education, it should not be difficult to anticipate how Christensen’s core ideas might apply. K12 teaching methods are often criticized as unchanging; critics frequently note that someone from a century ago would not be lost if suddenly dropped into a classroom of today. The long-standing model involves age-based classes of students reporting to a teacher standing at the front of a room, engaging the class with presentations and discussions covering isolated subjects (math, science, history, and language arts), with performance evaluated by tests at regular intervals. This model has remained essentially the same for a long time and seems very resistant to change.

While stable, the K12 model faces significant challenges. Many students are unmotivated, and absentee rates are high. Despite significant resources invested in the U.S., performance on standardized tests lags behind that observed in other countries. The COVID crisis, when students could not meet in the traditional face-to-face classroom, appears to have created problems that persist. Despite mantras such as “all students succeed” and “leave no child behind”, student performance becomes increasingly variable as students move through the grade levels, bringing into focus many questions about meeting the needs of a diverse population of learners.

These issues exist within an environment of educator complaints about low pay and a lack of public support, along with pressure to address an ever-growing number of goals. Why aren’t students more capable of understanding the basics of money management? Why are kids spending so much time on their phones and reading so little? Why are kids questioning their sexual orientation? Why can’t K12 schools do a better job of preparing students who want to go to work after high school rather than pursue higher education? Why do kids have so many psychological problems?

Rereading Disrupting Class

After a decade or so, I just reread Christensen’s Disrupting Class, which turned out to be an interesting experience in a couple of ways. First, I don’t reread many books because there always seems to be something new I want to read, but the investment of time can pay off with a certain type of book. Rereading a book that gave you important insights into an emerging or changing situation can be quite informative. The time between reads allows you to accumulate relevant experiences that support further reflection on your original conclusions and insights. These intervening experiences can also guide a rereading toward nuances that did not initially stand out. There is more. Some writers who explore an emerging trend are willing to predict specific accomplishments and time frames. What actually happened? Were the predictions realized, and if not, what factors prevented the predicted outcomes?

I became interested in Christensen’s analysis of the challenges of K12 education because I thought the challenges I have already outlined could be addressed by using technology to individualize learning opportunities, accommodating differences in aptitude, existing knowledge, and preferred approaches to learning. (Christensen used Howard Gardner’s theory of multiple intelligences as a justification for alternative learning experiences.) When translating these suggestions into my own way of thinking about instructional alternatives, I equated Christensen’s suggestions with an updated way of implementing mastery learning that takes advantage of online technology. This was not the term used in his book, but Christensen did mention k12.org as one of his examples, and I would describe it as an example of online mastery instruction.

Christensen observed that disruptive practices tend to emerge at the margins, while mainstream practices are very resistant to disruption. Established mainstream practices fall prey to the innovator’s dilemma, with efforts limited to doing a better job of what is already being done. He predicted that home schooling, credit recovery (a way to address failed or incomplete courses), and specialized courses that cannot draw enough students in an individual school would provide the earliest exposure to online, individualized learning experiences.

Online, digital approaches have succeeded on the fringe, and these successes were expected to encourage more mainstream adoption. This is what I mean by having the opportunity to test predictions: implementation has not progressed as anticipated. For example, Christensen predicted that by 2019, about 50% of high school courses would be delivered online. This prediction proved overly optimistic, and I would guess that any movement in that direction was reversed by negative experiences with the fully online instruction forced on schools during the COVID years. Other predictions have also failed. One concerns the alternative educational materials that would become available to serve the multiple intelligences Christensen emphasized. Rather than a single, common textbook, he predicted that technology would mature to the point that educators and student peers could create learning materials. Course management systems (CMSs) were emerging, and these systems would store, sequence, and deliver options generated by commercial providers as well as by educators themselves. Perhaps some attempts have been made in this direction. I would point to concepts such as Hyperdocs built with Google apps and teacher-based content production such as Teachers Pay Teachers (TPT) as consistent with this prediction, but the scale of adoption has been small.

I spent part of my career teaching in a graduate program focused on Instructional Design, and I found the concept of teachers as instructional designers interesting. So far, educators are not trained as designers, and instructional design is different from lesson planning. Teachers certainly have the background to become instructional designers, but as with so many things educators could do, there is always the question of how their time should be allocated.

Is technology a tool for disruption or will it be limited to isolated and focused improvements?

Perhaps technology is not a tool that will result in educational disruption but one that will play a more focused role in improving specific areas of what we already do. The alternative is that technology provides the only reasonable means to break free from an approach that seems to be floundering in complexity, inefficiency, and frustration. Perhaps Christensen succumbed to Amara’s law. You may have heard of this idea without knowing to whom it is attributed. Roy Amara proposed that we tend to overestimate the effect of a technology in the short term and underestimate its effect in the long term. Perhaps we are too impatient.

I understand that one criticism of those who advocate alternatives to the status quo is that when an alternative does not generate much change, their reaction is to claim “you didn’t do it right”. There is some legitimacy to this criticism, as people are reluctant to abandon ideas they consider good even when those ideas do not produce practical successes. Keeping this in mind, and considering the limited success of current approaches as well, I still believe there are some areas of potential.

Here are some examples:

Teachers can create instructional materials efficiently with the use of AI. Specialized AI tools (e.g., eduaide.ai, Diffit) assist educators in creating instructional materials customized to suit students who prefer different types of learning experiences.

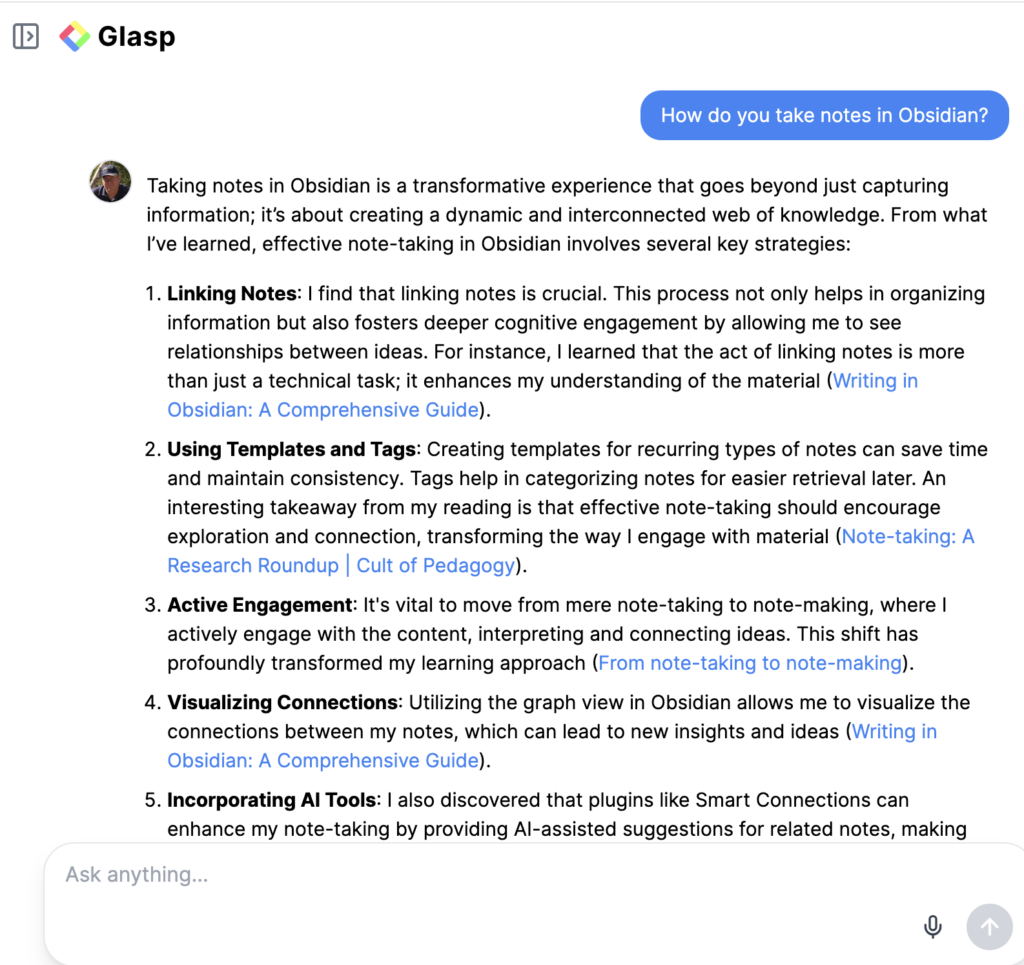

AI offers the opportunity for personalized tutoring. Christensen refers frequently to the impact of tutoring, but he uses the term to mean instructional tutorials. Tutorials do provide individualized learning experiences in certain ways. When I think of tutoring, a topic I have always found interesting, I tend to think of a one-to-one interaction with another individual. AI can approximate this experience and is more accessible and economical than traditional tutors. I have written several posts focused on AI tutors, and I encourage educators to explore this possibility. The common complaint that technology is isolating is worth considering. As an adult independent learner, I would counter that all of us eventually end up learning in isolation. While I consider the skills of independent learning essential, I do note that Christensen lists two requirements that must be met to motivate K12 learners: a way to feel competent as a learner and social connections. I agree, and I see peer engagement within an AI tutoring environment as a very reasonable approach. This strategy offers a combination of AI and peer tutoring.

Individualized learning opportunities: I am not ready to give up on online, individualized learning experiences. These experiences might best be used as a supplement to other classroom experiences (e.g., as an alternative to a textbook) or assigned for enrichment or remedial purposes. Khan Academy offers a flexible environment and set of tools that should be available for educators to assign or for individual learners to access on their own.

I am not ready to dismiss the role technology can play in K12 education. I am waiting for those who point to limitations to offer reasonable options.
