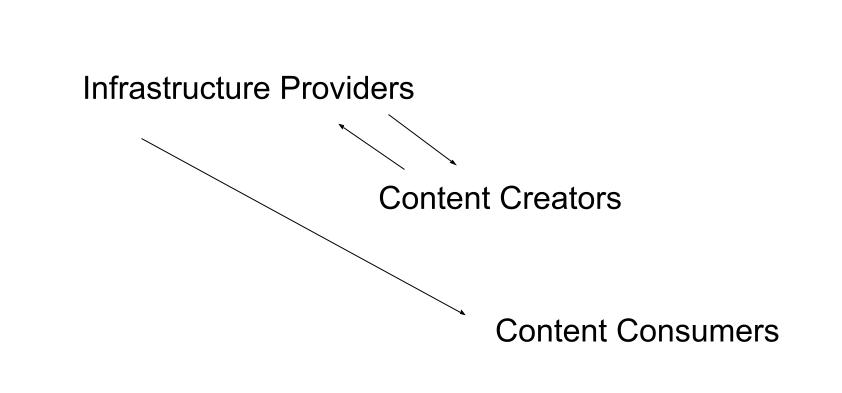

I want to offer an analysis of what I think the rights and responsibilities of the parties involved in producing, sharing, and consuming online content should be. The three parties that make up this system seem obvious: content creators, content consumers, and infrastructure providers. What I propose is that we consider the rights and responsibilities of these three parties more carefully.

Content creators: Whether it is text, images, or videos delivered as part of a blog or social media site, content is present because someone created it. I suppose I now have to amend this claim to add “unless it was created by AI”. I created this post. I assume you have created content as well.

Content consumers: Those who view or listen to online content are consumers of that content.

Infrastructure providers: Infrastructure providers supply the bridge between content creators and content consumers. Providers offer hosting services that accept input from creators and deliver it to consumers. Infrastructure providers include companies such as Google (Blogger), Facebook, X, Medium, and Substack, as well as companies that rent hosting services to creators (I use Bluehost).

The identification of these three interconnected parties seems straightforward. Now, what about rights and responsibilities? I think of rights and responsibilities as related to the flow of information. A party either accepts the conditions established earlier in the flow or refuses to participate. A lack of participation may encourage the parties already involved to modify their expectations. When describing the rights and responsibilities among these parties, I am proposing what I consider ethical rather than what is possible. There are ways to avoid the choice between accepting the conditions and not participating at all (blocking ads, for example). I regard these workarounds as unethical.

Content creators and infrastructure providers have early roles in this flow. At this point, what are the expectations of the infrastructure provider? What conditions must the content creator accept? Does the infrastructure provider assume it has the exclusive right to present the content, or can the content creator offer the same content through other providers under other conditions? Does the provider assume any editorial control beyond rejecting obviously inappropriate material? Does the infrastructure provider pick and choose what it will accept? Revenue opportunities must be clearly understood. Are revenue decisions made independently by one party (e.g., whether ads are added or subscription fees charged), or are such decisions made collaboratively, with the parties bound by a specified method for sharing any income generated?

As for the infrastructure provider and the consumer, the flow of content must be based on transparency. The ethical options for the consumer are to use the content as provided or not, but this must be an informed decision. In situations in which personal information is being collected to compensate the provider and the content creator, the consumer must understand what information is being collected and for what purpose. An important distinction here is between the use of information to target ads that directly support the infrastructure provider and content creator, and the collection of information for a secondary purpose (e.g., third-party ads that collect information that is used by or sold to other parties not associated with the exchange between the consumer and the infrastructure provider). Consumers should have a way to determine whether either or both methods of collecting their information are being used.

The content creator and the consumer have a similar relationship. If the content requires a subscription, it is obvious what the consumer is expected to provide in exchange for the content. Including ads to generate revenue is murkier. I don’t accept that the consumer has a right to block an ad, because both the infrastructure provider and the content creator have a right to compensation for content that is being consumed.

Are there arrangements that work?

The only arrangements that fully satisfy what I consider the rights and responsibilities of all parties are subscription services (e.g., Substack, Medium). With subscription services, it is clear what all parties contribute and receive.

I do think an ad-supported system can work if it is not purposefully misused. Ad revenue used to work in other industries (television), but cable successfully challenged this approach by offering subscription content. In that case, consumers wanted more than a system that worked at one level but did not provide enough.

The example I would offer to support a better ad-based approach is Brave. Brave allows content creators to sign up to receive compensation when content consumers using Brave view their ad-supported content. Consumers who opt in to viewing Brave ads can receive compensation from the companies using Brave to display those ads. Yes, I said consumers receive compensation for viewing ads. Brave then provides a way for those receiving this revenue to compensate content creators. Brave takes a cut when users opt in.

The Brave system fits situations in which content creators are paying to share their content. If consumers accept ads (they can instead use the browser simply to block ads) and then share the revenue they receive with content creators, the system works. So, I pay Bluehost to provide infrastructure for sharing my blog, and I have enrolled my blog with Brave. If consumers use Brave and pass along the revenue they receive for viewing ads to content creators, then I should receive compensation based on how frequently my content is viewed.

I like this system as an example of what is possible. I am also realistic: I spend far more to rent the infrastructure I use to share content than I receive from the Brave system. Part of my deficit is my responsibility. Whether readers visit my content or not is not the responsibility of the infrastructure I rent or of Brave. However, I also cannot control whether a content consumer uses the Brave browser, and that part of the system is not my responsibility. This is what I mean by a system that potentially addresses what all parties contribute to and receive from it.

Those readers not using Brave see Google ads rather than Brave ads on my blog.

Here is an earlier post I wrote about the Brave environment

Summary

I think there are long-term realities that will shape the online experience, related to how the parties involved are compensated. There are legitimate concerns about the collection of personal information to target ads, and there are legitimate concerns about how the costs of operation and the compensation of content creators will be covered. Subscription-based services are one solution. This seems to be what is happening with television and, increasingly, with online content. I think a different ad-based approach is also possible. This post has attempted to analyze the factors behind the pressure for change.

