Note-taking is a foundational skill that supports comprehension, retention, and critical thinking. However, many students below high school age struggle to develop effective note-taking habits on their own. There is little research on the development of note-taking skills in K-12 compared with higher education, and within K-12, note-taking in elementary and middle schools receives very little attention. Still, it is commonly accepted that from about fourth grade on, the goal of reading instruction shifts from learning to read to reading to learn.

I have always been interested in note-taking research and practice, and one experience with young learners stands out. My wife and I worked with a second-grade teacher to explore our early ideas about creating multimedia projects as learning opportunities. Pam Carlson, our elementary teacher colleague, was preparing her students to participate in what we called the butterfly project, in which they were learning about the life stages of butterflies, migration, and related topics. Students were creating a HyperCard stack to share what they had learned, and each student selected a specific butterfly to describe in the card they created for the stack. As students reviewed the various books Pam provided as resources, she offered the following instructions to guide what they wrote down. When you find something you want to include, she suggested, think about that information carefully and then turn your book over so that you write down what you learned without copying from the book. When I taught or wrote about note-taking, I could rephrase her instructions in the terms researchers would use to describe the goals of her strategy (summarization, personalization, generative processing, etc.), but these concrete instructions still pop into my memory whenever I address the topic.

This post summarizes the few studies I was able to locate that seemed relevant to educators who work with younger students, and I try to describe what I see as the implications for classroom practice. As always, more work is needed. One basic observation, which probably seems obvious to most educators, is that the studies I reference here, Ilter (2017), Lee et al. (2008), and Chang & Ku (2014), highlight the importance of explicit instruction and scaffolded strategies in helping young learners master note-taking skills. An interesting generality about note-taking is that while nearly all learners take notes in some form, few of any age experience direct instruction in, and evaluation of, this important skill.

Below, you will find key recommendations from these studies to help educators guide their young students toward becoming capable note-takers.

1. Explicit and Scaffolded Instruction of Note-Taking Strategies

One of the most important takeaways from the research is that note-taking skills should not be left to chance. Ilter (2017) emphasizes the need for early and explicit instruction in note-taking, starting in elementary school. Students often lack the intuitive ability to identify key information or organize their notes effectively, so educators must provide clear guidance.

Scaffolding is a critical component of this instruction. As the word implies, scaffolds are supports offering structure. A partial outline makes a reasonable example. Teachers should begin by modeling note-taking strategies and gradually shift responsibility to students as they gain confidence. For example, early lessons might involve guided practice with teacher feedback, while later lessons encourage students to take notes independently. This gradual release of responsibility ensures that students build the skills they need to succeed on their own.

2. Writing in Their Own Words

One of the biggest challenges for young students is avoiding verbatim copying. As I mentioned earlier, Pam Carlson’s strategy for her second-grade students is noteworthy here. Ilter (2017) and Lee et al. (2008) stress the importance of teaching students to paraphrase information in their own words. This practice not only improves comprehension but also helps students engage more deeply with the material. A related skill is brevity; one researcher liked the label “terse.” The goal is not just to paraphrase, but to focus on key or interesting ideas.

To support this skill, educators can:

- Model how to paraphrase by thinking aloud during lessons. A think-aloud is simply an effort to externalize your thinking. It is a common strategy suggested to help learners get a grasp on mental behaviors they cannot see.

- Provide practice exercises where students rewrite sentences or paragraphs in their own words.

- Emphasize the value of organizing information logically, rather than simply copying it.

By focusing on paraphrasing and organization, students can develop a more meaningful understanding of the material they are studying.

3. Guided Notes and Partial Graphic Organizers

Lee et al. (2008) highlight the benefits of using guided notes and partial graphic organizers to support young learners. Researchers often use the label “scaffolding” to describe this strategy. The goal is to offer guidance and reduce the “cognitive load” beginners face with a new skill: these tools help students focus on the most important information rather than trying to capture everything at once.

For example:

- Provide students with partially completed notes that include blanks for them to fill in during a lesson.

- Use written prompts to guide students in identifying main points, summarizing content, and organizing their notes.

These strategies are particularly effective for elementary students, who may struggle to process and record information simultaneously. By reducing the mental effort required, guided notes and graphic organizers allow students to concentrate on understanding the material.

4. Focusing on Key Ideas, Keywords, and Text Structures

Chang & Ku (2014) emphasize the importance of teaching students to identify and use key ideas, keywords, and text structures in their notes. Their research with fourth graders provides several practical strategies for educators:

- Highlighting Main Ideas: Teach students to use titles, headings, and guiding questions to identify the most important information in a text.

- Recognizing Keywords: Help students identify function words like “however,” “because,” and “therefore,” which signal relationships between ideas.

- Using Visual Aids: Introduce charts, diagrams, and other visual tools to represent similarities, differences, and other relationships. For example, how are moths and butterflies the same and different?

- Analyzing Text Structures: Teach students to recognize organizational patterns, such as sequences or classifications. Is the author describing the steps in a process, or the characteristics of a phenomenon or concept that you should list in your notes?

These strategies not only improve the quality of students’ notes but also enhance their ability to understand and retain information.

5. Practice and Feedback

Finally, practice and feedback are essential for developing strong note-taking skills. Ilter (2017) recommends providing students with ample opportunities to practice taking notes independently. This practice should be paired with regular feedback from teachers and peers to help students refine their techniques.

For example:

- After a lesson, ask students to share their notes with a partner and discuss what they found most important.

- Provide specific feedback on how students can improve their notes, such as by adding more keywords or organizing information more clearly.

- Encourage students to revise their notes based on feedback and reflect on what they learned.

By creating a supportive environment where students can practice and receive constructive feedback, educators can help them build confidence and competence in their note-taking abilities.

The Integrated Process

Ilter (2017) suggests a sequence educators can follow in working with students to develop these skills.

The Five-Step Instructional Model for Note-Taking

Ilter (2017) introduces a structured five-step approach to teaching note-taking, which can be applied to both reading and listening tasks. This model provides a clear framework for students to follow, making the process of taking notes more manageable and effective.

Step 1: Identify the Main Idea

Students should learn to highlight or underline important information and paraphrase it in their own words. Teaching them to use textual clues, such as headings or topic sentences, can help them pinpoint the main idea without resorting to verbatim copying.

Step 2: Information Reduction

Encourage students to condense paragraphs into essential points. This step helps them avoid excessive copying and focus on the most critical information.

Step 3: Keyword Identification

Teach students to recognize keywords that signal relationships between ideas, such as “because,” “however,” or “finally.” These words can help students understand the structure of the information and create meaningful connections in their notes.

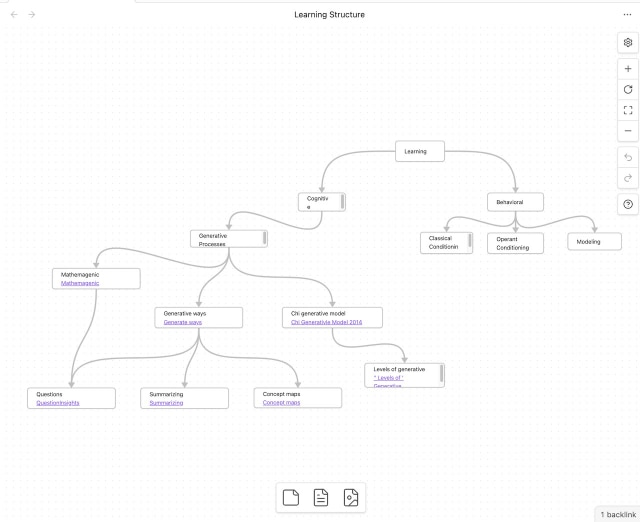

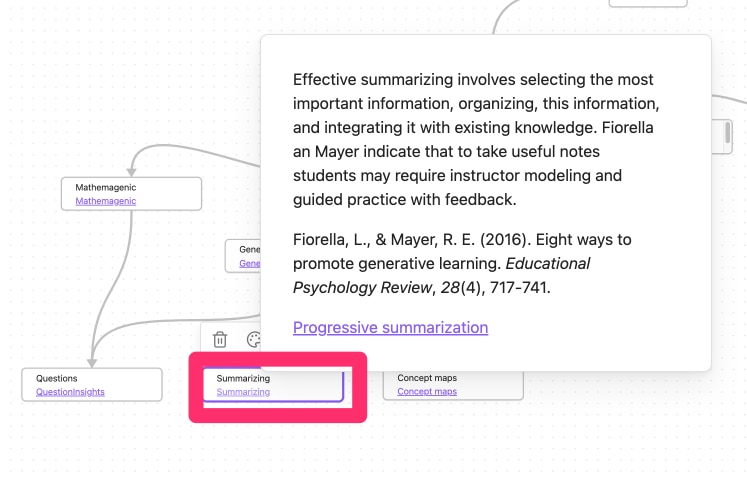

Step 4: Use of Representations

Introduce visual tools like symbols, charts, diagrams, or graphic organizers to help students organize their notes. These representations make it easier to see relationships between ideas and improve recall.

Step 5: Analysis of Text Structures

Help students recognize text structures, such as headings, subheadings, sequences, and classifications. Understanding these structures allows students to organize their notes more effectively and see how different pieces of information fit together.

Summary

This post extends my previous posts on note-taking, focusing here on academic settings and younger learners. Beginning in approximately fourth grade, learners both read to learn and listen to brief teacher presentations. Note-taking is an important life skill that is seldom directly taught to learners of any age. Researchers are proposing and describing ways elementary and middle school teachers can help students begin to develop these skills.

References

Chang, W., & Ku, Y. (2014). The effects of note-taking skills instruction on elementary students’ reading. The Journal of Educational Research, 108(4), 278–291.

Ilter, I. (2017). Notetaking skills instruction for development of middle school students’ notetaking performance. Psychology in the Schools, 54(6), 596–611.

Lee, P., Lan, W., Hamman, D., & Hendricks, B. (2008). The effects of teaching notetaking strategies on elementary students’ science learning. Instructional Science, 36(3), 191–201.
