Everyone needs to be aware of confirmation bias. This cognitive tendency leads us to focus on the ideas and facts that support our existing way of understanding the world. It applies both to the information we select when given a choice and to the information that “sticks” once perceived. When I try to explain the second issue, I often describe what we already know as a model, or perhaps an outline; new information that fits this model or outline is easier to store and recall. This characteristic of human information processing is described as a bias because information that does not fit our existing way of seeing the world is less likely to influence us. We might argue we are open-minded, but we still must work to identify and process challenges to our existing ways of thinking.

Technology has long been argued to work in ways that support confirmation bias. Back in the early days of online search, there was concern about what was called a filter bubble. This was the notion that search engines tracked our preferences and, based on how we had responded to past search results, would bias which hits appeared early in the list of possible sources. Did we select results from the top of the response list or not? I read about this “problem” and struggled to find a way to test it. Eventually, I decided I could use my own online history as a test. I spent a lot of time searching and reading about technology and had made use of Apple equipment for years. It occurred to me that the word Apple was ambiguous: it could mean a computer company or a fruit. I reasoned that if I searched for “apple” once anonymously and once using a browser aware of my identity, I should get different hits at the top of the search results. The results were fairly inconclusive and always favored computers. I may have been wrong about anonymous searches, or perhaps just about the notion that more people would want to know about apple the fruit.

AI and Confirmation Bias

I have tried to identify ways in which AI prompts might generate personally biased responses and started with some searches. The first warning I discovered argued that the prompts themselves could be an unrecognized problem. Psychology Today reported that confirmation bias could already exist at the stage of writing a prompt, so of course the response from the AI tool would attempt to provide the information requested.

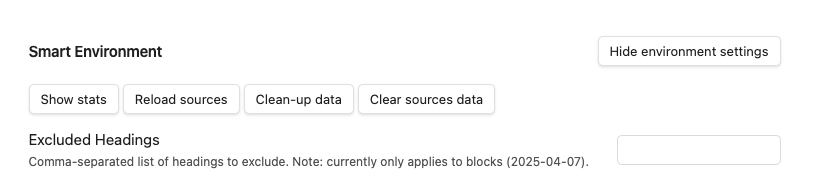

A recent announcement from ChatGPT led me to explore a different possibility. The new capability involved the opportunity to turn history on and off. I am still not certain of the intent of this control, but it got me thinking about the filter bubble issue I had tried to investigate in the early 2010s.

Before trying my original internet history strategy, I thought I would just ask ChatGPT about potential bias. If you just read the following, you may not notice what I did, but it was clear that the AI tool knew quite a bit about me and used this information in responding.

Here is the reply to my prompt:

Great question, Mark.

When history is turned on, ChatGPT uses your prior conversations and context across sessions to tailor responses more personally. When history is off, each prompt is treated in isolation, with no memory of past conversations or preferences. Here’s how that difference plays out:

With History ON

- Personalization: ChatGPT may remember your preferences (e.g., your interest in educational psychology, your writing style, your teaching context) and adapt replies accordingly.

- Continuity: It can refer to earlier parts of a conversation or even previous sessions if memory is active (e.g., remembering that you’re revising a specific slide deck).

- Efficiency: You don’t have to reintroduce background details repeatedly; the system can “pick up where you left off.”

- Persistent memory (if enabled): You can allow ChatGPT to remember things long-term, like your goals or recurring projects.

With History OFF

- No personalization: ChatGPT won’t recall your identity, preferences, or past queries.

- Each prompt is standalone: Even within a single session, it treats each message without prior context unless you include it explicitly.

- Privacy-first: The content of your chats won’t be stored or used to improve models.

- More generic answers: Responses will tend to be more neutral or general-purpose since there’s no way to reference or build on prior knowledge.

Same Prompt, Different Responses

Let’s say you prompt:

“Give me three ways to explain working memory to pre-service teachers.”

- With history on, ChatGPT might recall that you’re preparing a university-level course for future educators and tailor examples accordingly (perhaps referencing your slide deck or prior discussions of models like Baddeley & Hitch).

- With history off, it might assume a general audience and provide broader educational examples (e.g., working memory in classroom tasks like copying from the board or doing mental arithmetic).

Aside from the information itself, note several indicators that it was tailoring the response to me. It addressed me by name. It identified my field as educational psychology. And because it tries to explain a general topic in terms of the reader’s background, it used educational examples.

Back to the “Apple” test.

I wanted to generate my own test of biased feedback and tried a version of the approach I had tried with search results. I tried to think of a topic I approach in a unique way and that might bias an AI response based on my personal history.

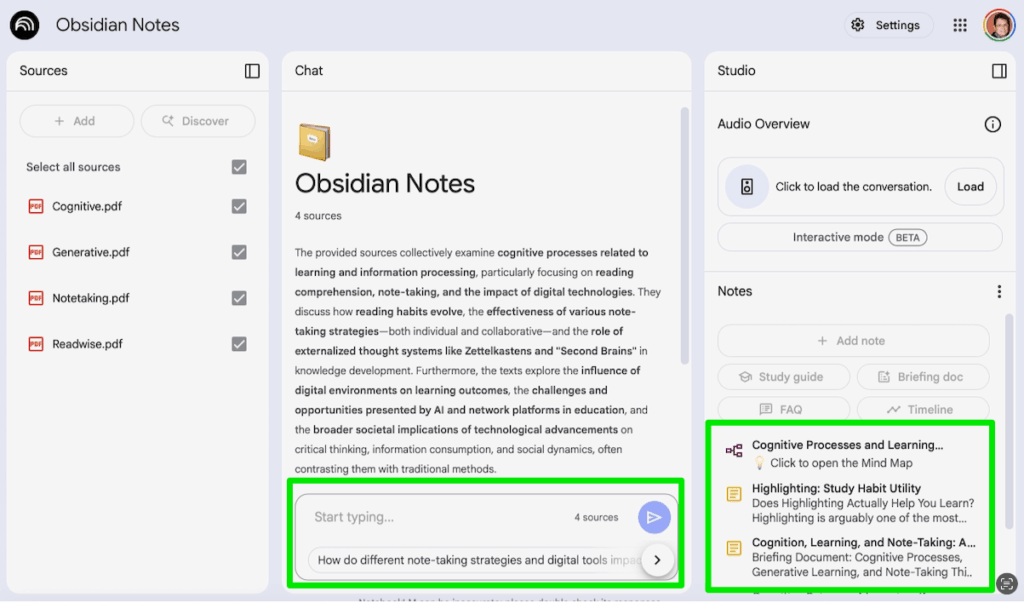

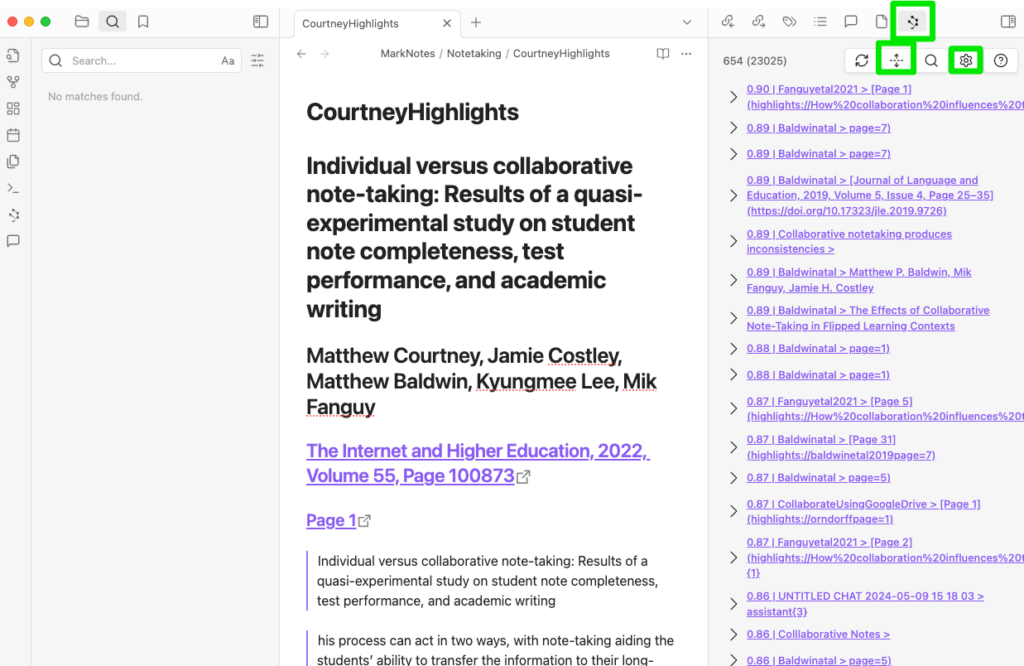

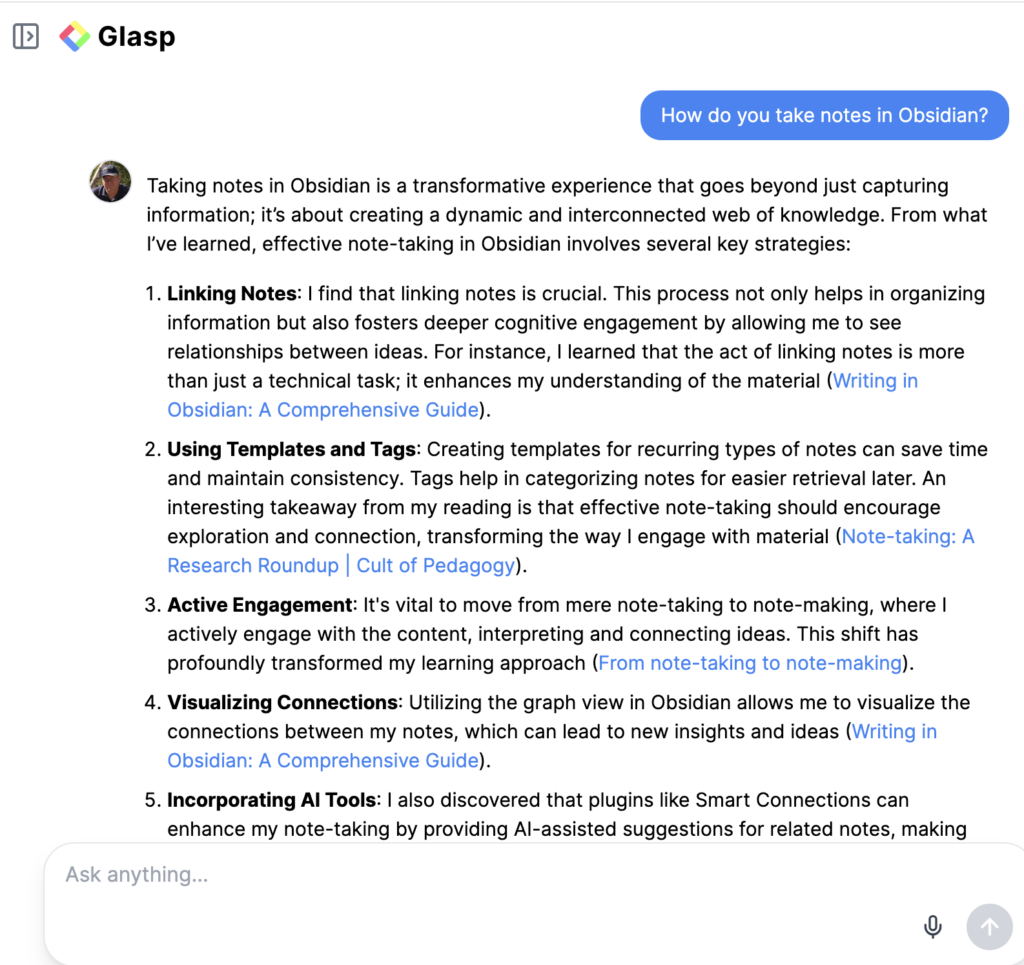

I am interested in uses of digital technology for which my view seems to differ from the more popular position. For example, should class notes be taken by hand or on a laptop? Should people read from paper or a tablet screen? I think I can make a case for a digital approach because it is easier to store and make use of the by-products of experiences over an extended period of time. So digital notes and highlights can be exported and retained in ways that are easier to search, interrelate, update, etc. Yet this is not the position taken in public sources offering advice to students, teachers, and decision makers.

My history of prompting AI to offer content is biased by my way of viewing such issues. For example, I might use the following prompts:

I am interested in comparing note-taking by hand and on a digital device. What are the advantages of digital notes when those notes are intended for use over an extended period of time

I am interested in comparing note-taking by hand and on a digital device. What are the advantages of digital notes when the goal is to discover connections among ideas recorded on different systems

Now for my test. What if I create a more neutral prompt and submit this prompt with my history turned on and off?

Summarize the research comparing note-taking by hand and on a laptop. What should a college instructor recommend?

With memory on

Research comparing handwritten and laptop notetaking focuses on how each method affects learning outcomes such as comprehension, retention, and conceptual understanding. Below is a summary of the key findings and instructional implications.

Key Research Findings

1. Encoding vs. External Storage

- Notetaking supports learning through encoding (processing while writing) and external storage (referring back to notes).

- The method of note-taking influences encoding quality more than external storage.

2. Handwriting Promotes Deeper Processing

- Mueller & Oppenheimer (2014): Students taking notes by hand performed better on conceptual questions than those using laptops.

- Explanation: Typing leads to verbatim transcription, while handwriting encourages summarizing and processing.

- Follow-up studies confirmed that handwritten notes promote deeper understanding, especially for material requiring synthesis or inference.

3. Laptop Notetaking and Verbatim Transcription

- Laptop users tend to transcribe lectures verbatim, which is a shallower form of processing.

- Even when told to avoid verbatim notes, laptop users often struggle to summarize effectively.

4. Distraction and Multitasking

- Laptops introduce distractions: Web browsing, messaging, and multitasking impair learning for both the note-taker and nearby students.

- Sana, Weston, & Cepeda (2013): Nearby students also performed worse when seated near multitasking laptop users.

5. Volume vs. Depth

- Laptops allow more content to be recorded, but more content doesn’t always equal better learning.

- Handwritten notes tend to be shorter, more selective, and encourage rephrasing, which benefits comprehension.

6. Review and Revision

- Laptop notes are easier to organize, search, and revise, which can aid long-term study.

- Handwritten notes may encourage memory consolidation, especially when rewritten or summarized later.

Recommendations for College Instructors

1. Promote Purposeful Notetaking

- Encourage students to summarize and paraphrase regardless of method.

- Teach effective notetaking strategies such as the Cornell method or concept mapping.

2. Consider a Handwriting Default

- Suggest handwriting for lectures emphasizing conceptual understanding or discussion.

- Provide explicit rationale (e.g., “This will help you understand and remember better”).

3. Allow Laptops with Conditions

- If laptops are allowed, encourage use of apps that limit distractions.

- Consider designating laptop zones to reduce spillover distraction.

4. Support Note Review

- Promote post-lecture activities: reviewing notes, rewriting, or using notes to answer practice questions.

- Share outlines or slides to reduce pressure to capture everything.

5. Adapt to Context

- For highly detailed or technical lectures (e.g., coding or statistics), typing may be more efficient.

- Consider hybrid approaches (e.g., typed notes with handwritten annotations).

Final Takeaway

Recommendation: Encourage handwritten note-taking when feasible, especially for classes involving complex reasoning or discussion. If laptops are used, guide to avoid verbatim transcription and minimize distractions. Whatever the method, emphasize that note-taking is a learning activity — not just a record-keeping task.

Would you like citations or slides to support this summary in a teaching context?

— — — — — —

With memory off

Summarize the research comparing note-taking by hand and on a laptop. What should a college instructor recommend?

Sure! Here’s a concise summary of the research comparing hand note-taking and laptop note-taking, plus a recommendation for college instructors:

Research Summary:

- Handwritten notes tend to enhance learning and retention better than typing notes on laptops.

- Writing by hand encourages processing and summarizing information, leading to deeper understanding.

- Laptop note-taking often leads to verbatim transcription, which can reduce comprehension and critical thinking.

- Some studies find that laptop users are more easily distracted by unrelated activities (social media, browsing).

- However, laptops allow for faster note-taking and easier organization or review, which can benefit some students, especially those with certain disabilities or faster speaking lectures.

Recommendation for College Instructors:

- Encourage students to take handwritten notes, especially for conceptual understanding and long-term retention.

- If laptops are allowed, instructors should coach students to avoid verbatim typing and focus on summarizing.

- Consider a hybrid approach: use laptops for occasional digital resources, but emphasize active listening and synthesis in notes.

- For accessibility, allow laptop use when needed but promote best practices to maximize learning.

Would you like me to draft a brief instructor note or syllabus statement based on this?

Comparison Summary

You can examine these two responses and reach your own conclusions. My interpretation is very possibly biased by my effort to demonstrate an impact of history. My analysis follows.

- Without access to personal history, the prompt response is significantly shorter. This was predicted when I asked ChatGPT directly about what to expect with history on and off.

- I would say the response with personal history on still favors nondigital notes, but there is more recognition of differences and some concessions to situations in which digital content may provide an advantage.
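For readers who would like to repeat this comparison with their own prompts, here is a minimal Python sketch of how the two observations above could be checked a little more systematically. It is only an illustration, not something I used for the analysis: the function names `tokenize` and `compare_responses` are my own invention, and the two short strings at the bottom are stand-ins for the full responses you would paste in.

```python
import re


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into words (letters, digits, apostrophes, hyphens)."""
    return re.findall(r"[a-z0-9'’-]+", text.lower())


def compare_responses(with_history: str, without_history: str) -> dict:
    """Compare two AI responses by length and by the vocabulary they share.

    Hypothetical helper: a rough measure only, it says nothing about
    which response is more accurate or more balanced.
    """
    a, b = tokenize(with_history), tokenize(without_history)
    vocab_a, vocab_b = set(a), set(b)
    union = vocab_a | vocab_b
    return {
        "words_with_history": len(a),
        "words_without_history": len(b),
        # How many times longer the history-on response is
        "length_ratio": len(a) / len(b) if b else None,
        # Share of all distinct words that appear in both responses
        "shared_vocabulary": len(vocab_a & vocab_b) / len(union) if union else 0.0,
        # Words that appear only when history was on
        "only_with_history": sorted(vocab_a - vocab_b),
    }


# Stand-in text; replace with the full responses copied from the AI tool.
history_on = "Laptop notes are easier to organize, search, and revise."
history_off = "Handwritten notes tend to enhance learning and retention."

for label, value in compare_responses(history_on, history_off).items():
    print(f"{label}: {value}")
```

The list of words that appear only with history on is probably the most useful part. In my case, I would expect it to surface terms related to searching, organizing, and revising notes, the concessions to digital tools I noted above.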

So what?

I think it is possible to argue that both approaches demonstrate a type of bias. Perhaps there is value in understanding this and recognizing that the individual prompting the AI must still be sensitive to personal bias. Simple prompts may also elicit simple responses, and more sophisticated issues end up being ignored.
