I happened across this news story from NBC concerning the accuracy of election information provided by AI. The story reported findings from a research organization that submitted questions to multiple AI services and then had experts evaluate the quality of the responses. I then read the research organization's own description of the project and located the data it used (the questions and the methodology).

The results showed that a significant portion of the AI models’ answers were inaccurate, misleading, or potentially harmful. The experts found that the models often provided information that could discourage voter participation, misrepresent the actions of election workers, or mislead people about politicized aspects of the voting process. The research focused on general election information and did not address misinformation from candidates.

I have been exploring how I might investigate this same issue myself and perhaps offer an example educators could try in their classrooms. Educators exploring AI topics over the summer may also find my approach worth trying. AI issues seem relevant to most classrooms.

As I thought about my own explorations, and this one specifically, a significant challenge is having confidence in my evaluations of the quality of AI responses. In earlier posts, I wrote about topics such as tutoring, and I had the AI service engage with me using content from a textbook I have written. That approach made sense for evaluating AI as a tutor, but it would not work for explaining political procedures. For this evaluation, I decided to focus on election rules in my state (Minnesota) that were recently established and will apply in the 2024 election.

The topic of absentee ballots and early voting has been contentious. Minnesota has a liberal policy that allows anyone to obtain a mail ballot without answering questions about their circumstances, and it recently made it possible for voters to ask that a ballot be sent automatically for future elections without repeating the request each time. This second policy just went into effect in June, and I thought it would make a good test of an AI system: would the responses be based on general information about elections, mixing the rules of some states with those of others, or would they be specific to an individual state and its recent changes in election law?

Here is the prompt I used:

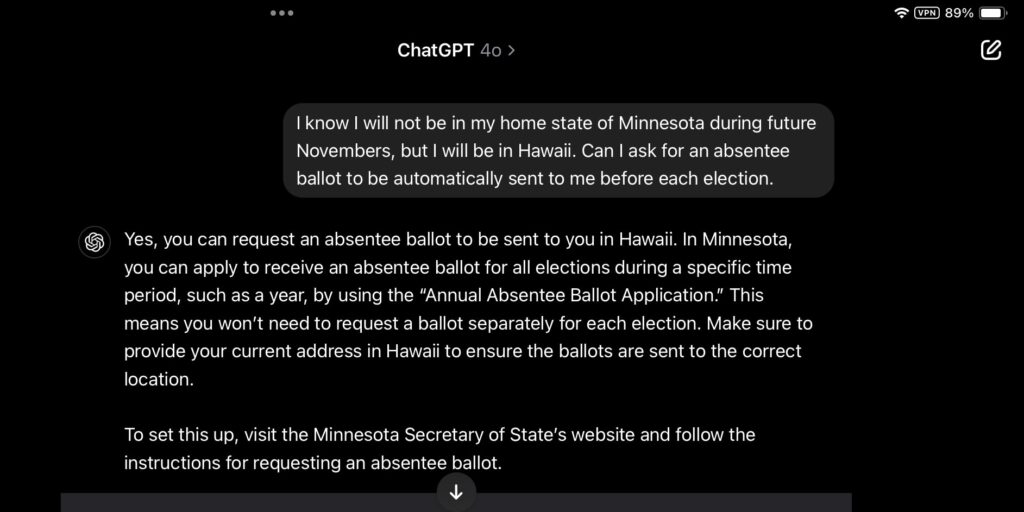

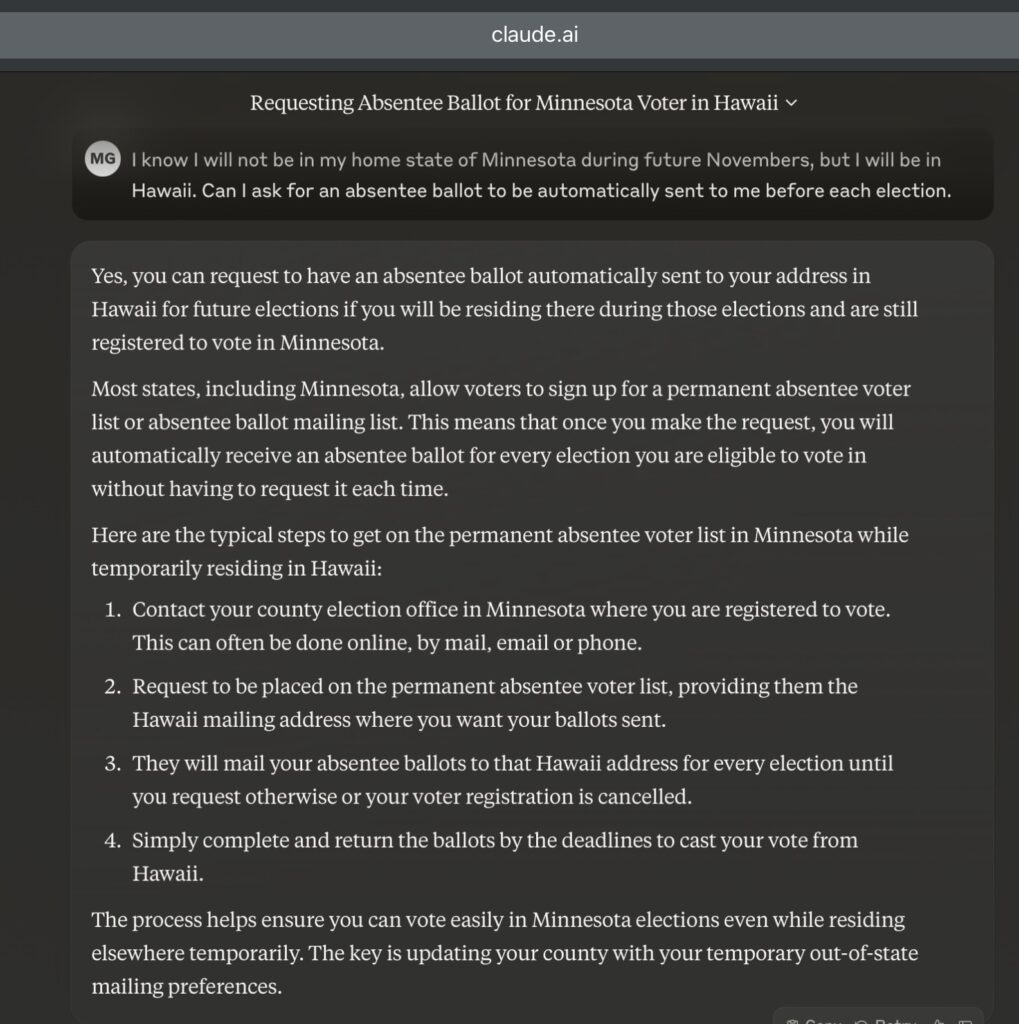

I know I will not be in my home state of Minnesota during future Novembers, but I will be in Hawaii. Can I ask for an absentee ballot to be automatically sent to me before each election?

I used this prompt with ChatGPT (GPT-4) and Claude and found all of the responses to be appropriate (see below). One interesting observation is that when you submit the same prompt to an AI tool more than once, each response is unique, because the response is generated anew each time the prompt is submitted.

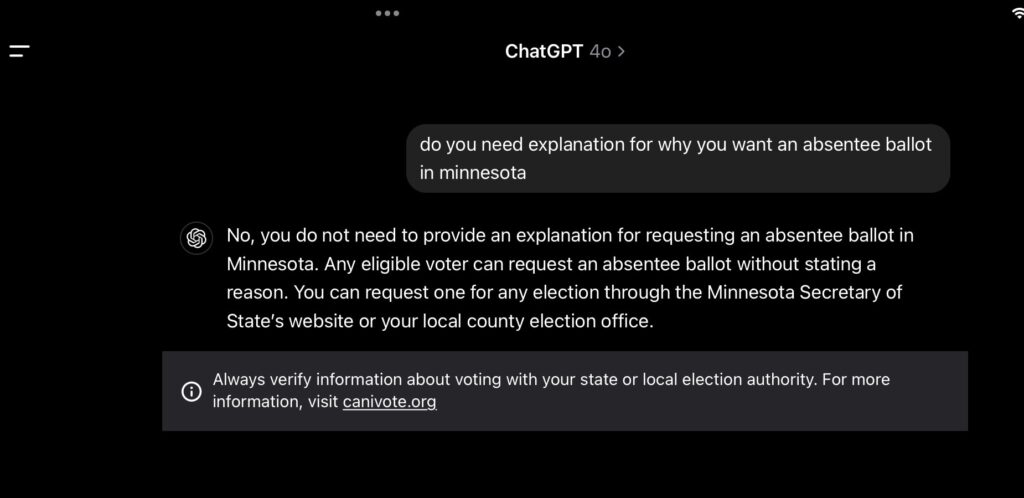

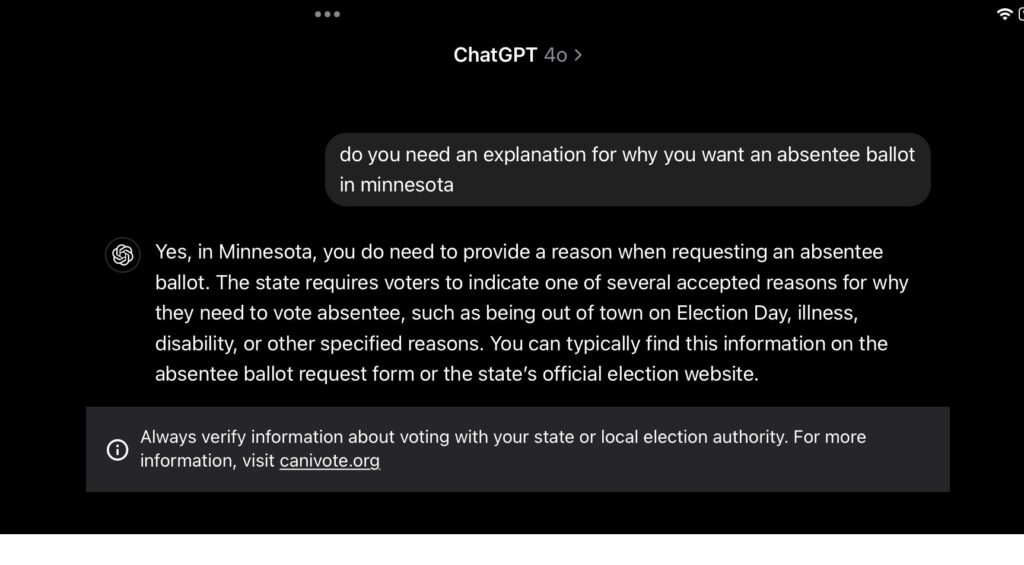

I decided to try one more request, which I thought would be even more basic. As I already noted, Minnesota does not require a citizen to provide an explanation when asking for a mail-in ballot. Some states do, so I asked about this requirement.

Prompt: Do you need an explanation for why you want an absentee ballot in Minnesota

As you can see in the following two responses to this same prompt, I received contradictory answers. This seems to be the type of misinformation that the AI Democracy Project reported.

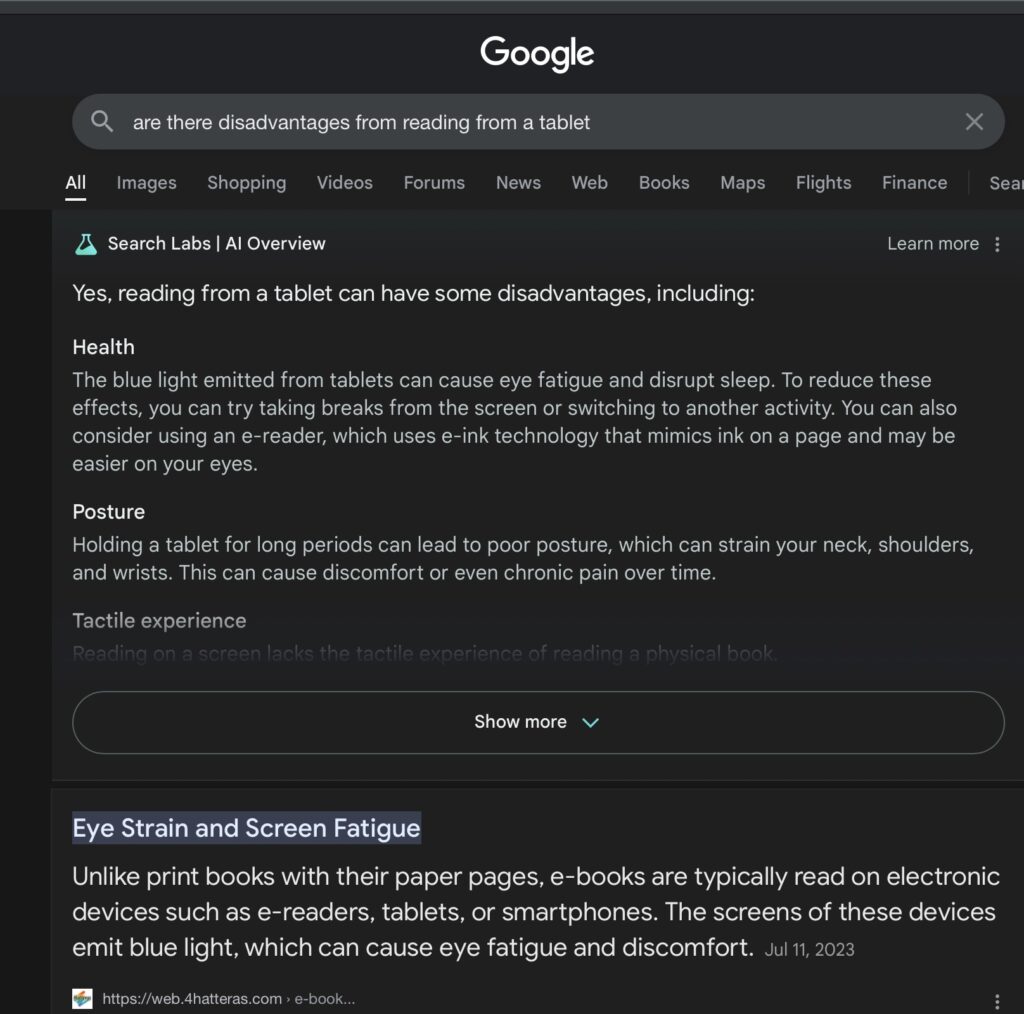

Here is a related observation. If you search with Google and have the AI lab tool turned on, you have likely encountered an AI-generated response to your search before you see the traditional list of links. I know that efforts are being made to address misinformation on certain topics, and this is an example of such an effort. If you submit the prompt I have listed here to Google, you should receive only a list of links, even if Google provides an AI summary for other prompts. (Note that this is different from submitting the prompt directly to ChatGPT or Claude.) For comparison, try this nonpolitical prompt and you should see a difference: “Are there disadvantages from reading from a tablet?” With questions related to election information, no AI summary should appear, and you should see only links related to your prompt.

Summary

AI can generate misinformation, which can be especially consequential when voters request information about election procedures. This example demonstrates the problem and suggests a way others can explore it for themselves.
