The issue I have with streaming television services is the same as the issue I have with services that support my personal knowledge management – many have a feature or two that I find helpful, but when should I stop paying to add another feature I might use? Exploring the pro version of AI tools so I can write based on experience is one thing, but what is a reasonable long-term commitment to multiple subscriptions in my circumstances?

My present commitments are as follows:

- ChatGPT – $20 a month

- Perplexity – $20 a month

- SciSpace – $12 a month

- Smart Connections – $3-5 a month for ChatGPT API
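
For anyone who wants to see the arithmetic, here is a minimal Python sketch that tallies the list above. The prices are the ones listed; the dictionary, the function name, and the choice to treat the Smart Connections API cost as a $3 to $5 range are my own illustration rather than anything the services provide.

```python
# Monthly prices from the list above, stored as (low, high) in US dollars.
# Fixed-price services use the same value for both ends of the range.
subscriptions = {
    "ChatGPT": (20, 20),
    "Perplexity": (20, 20),
    "SciSpace": (12, 12),
    "Smart Connections (API usage)": (3, 5),
}


def monthly_range(subs):
    """Return the (low, high) total monthly cost for a set of subscriptions."""
    low = sum(lo for lo, _ in subs.values())
    high = sum(hi for _, hi in subs.values())
    return low, high


low, high = monthly_range(subscriptions)
print(f"Monthly: ${low}-${high}")
print(f"Yearly:  ${low * 12}-${high * 12}")
```

Removing an entry from the dictionary and rerunning the script shows what dropping any one service would save over a year.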

Those who follow me on a regular basis probably have figured out my circumstances. I am a retired academic who wants to continue writing for what can most accurately be described as a hobby. There are ads on my blogs and I post to Medium, but any revenue I receive is more than offset by my server costs and the Medium subscription fee. So, let’s just call it a hobby.

The type of writing I do varies. Some of my blog posts cover a wide variety of topics and are mostly based on personal opinion buttressed by a few links. My more serious posts are intended for practicing educators and are often based on my review of the research literature. Offering citations that back my analyses is important to me even if readers seldom follow up by reading the cited literature themselves. I want readers to know my comments can be substantiated.

I don’t make use of AI in my writing. The exception would be that I use Smart Connections to summarize the research notes I have accumulated in Obsidian and I sometimes include these summaries. I rely on two of these AI tools (SciSpace and Perplexity) to find research articles relevant to topics I write about. With the proliferation of so many specialized journals, this has become a challenge for any researcher. There is an ever-expanding battery of tools one can use to address this challenge and this post is not intended to offer a general review of this tech space. What I offer here is an analysis of a smaller set of services that I hope identifies issues others may not have considered.

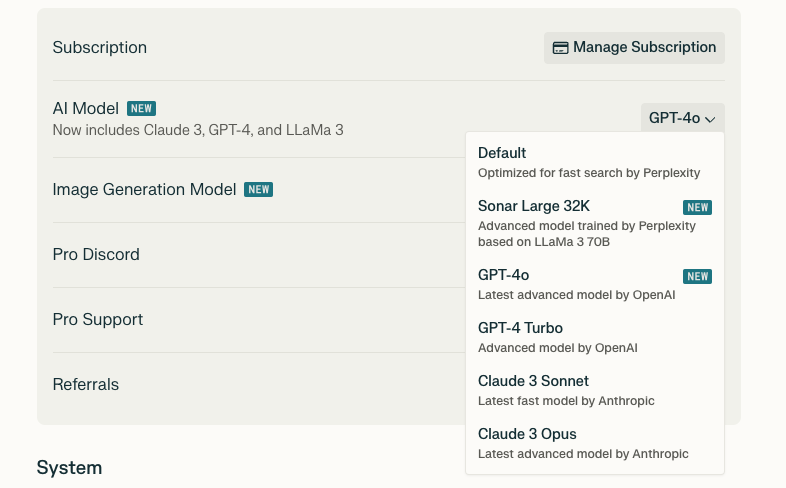

Here are some issues that add to the complexity of making a decision about the relative value of AI tools. There are often free and pro versions of these tools. The differences vary. Sometimes you have access to more powerful or more recent versions of the AI. Sometimes the pro version is the same as the free version, but you have no restrictions on the frequency of use. Occasionally other features such as exporting options or online storage of past activities become available in the pro version. Some differences deal with convenience and there are workarounds (e.g., copying from the screen with copy and paste vs. exporting).

Services differ in the diversity of tools included and this can be important when choosing between subscribing to several services and committing to one. Do you want to generate images to accompany content you might write based on your background work? Do you want to use AI to write for you or perhaps to suggest a structure and topics for something you might write yourself?

There can also be variability in how well a service does a specific job. For example, I am interested in a thorough investigation of the research literature. What insights related to the individual articles identified are available that can be helpful in determining which articles I should spend time reading?

Perplexity vs. SciSpace

I have decided that Perplexity is the most expendable of my present subscriptions. What follows is the logic for this personal decision and an explanation of how it fits my circumstances.

I am using a common prompt for this sample comparison:

What does the research conclude related to the value of studying lecture notes taken by hand versus notes taken on a laptop or tablet?

Perplexity

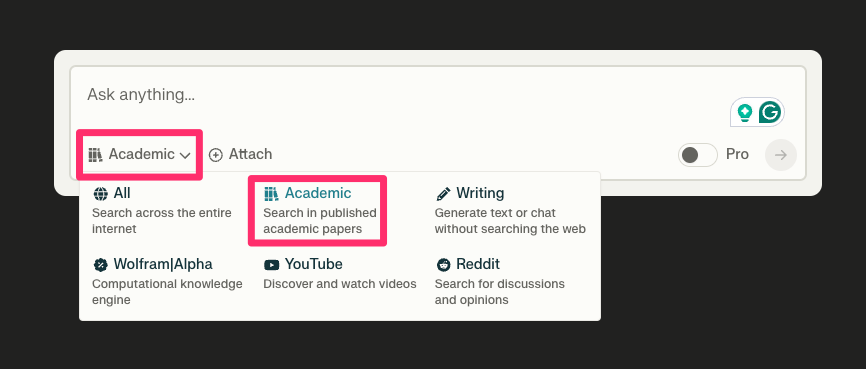

I can see how Perplexity provides a great service for many individuals who have broad interests. I was originally impressed when I discovered that Perplexity allowed me to focus its search process on academic papers. When I first generated a prompt, I received mostly sources from Internet-based authors on the topics that were of interest to me and as I have indicated, I was more interested in the research published in journals.

I mentioned that there is a certain redundancy of functions across my subscriptions and the option of writing summaries or structuring approaches I might take in my own writing using different LLMs was enticing.

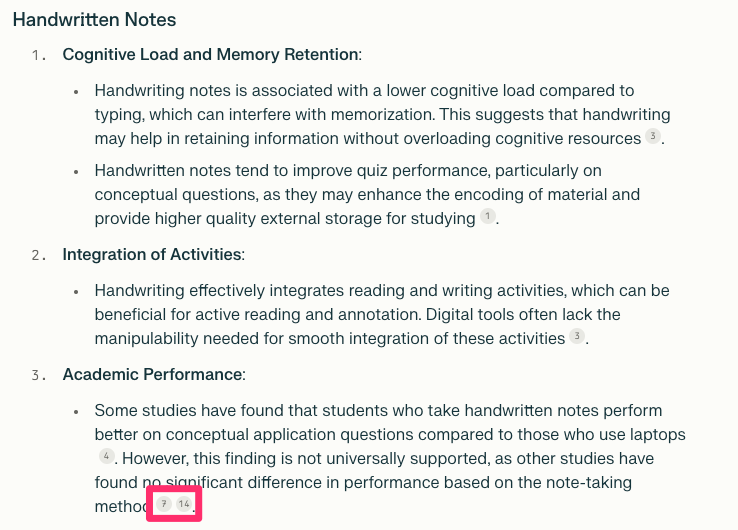

The characteristic I value in both Perplexity and SciSpace is that summary statements are linked to sources. A sample of the output from Perplexity appears below (the red box encloses links to sources).

When the content is exported, the sources appear as shown below.

Citations:

[1] https://www.semanticscholar.org/paper/d6f6a415f0ff6e6f315c512deb211c0eaad66c56

[2] https://www.semanticscholar.org/paper/ab6406121b10093122c1266a04f24e6d07b64048

[3] https://www.semanticscholar.org/paper/0e4c6a96121dccce0d891fa561fcc5ed99a09b23

[4] https://www.semanticscholar.org/paper/3fb216940828e27abf993e090685ad82adb5cfc5

[5] https://www.ncbi.nlm.nih.gov/pmc/articles/PMC10267295/

[6] https://www.semanticscholar.org/paper/dac5f3d19f0a57f93758b5b4d4b972cfec51383a

[7] https://pubmed.ncbi.nlm.nih.gov/34674607/

[8] https://www.ncbi.nlm.nih.gov/pmc/articles/PMC8941936/

[9] https://www.semanticscholar.org/paper/43429888d73ba44b197baaa62d2da56eb837eabd

[10] https://www.semanticscholar.org/paper/cb14d684e9619a1c769c72a9f915d42ffd019281

[11] https://www.semanticscholar.org/paper/17af71ddcdd91c7bafe484e09fb31dc54623da22

[12] https://www.semanticscholar.org/paper/8386049efedfa2c4657e2affcad28c89b3466f0b

[13] https://www.semanticscholar.org/paper/1897d8c7d013a4b4f715508135e2b8dbce0efdd0

[14] https://www.ncbi.nlm.nih.gov/pmc/articles/PMC9247713/

[15] https://www.semanticscholar.org/paper/d060c30f9e2323986da2e325d6a295e9e93955aa

[16] https://www.semanticscholar.org/paper/b41dc7282dab0a12ff183a873cfecc7d8712b9db

[17] https://www.semanticscholar.org/paper/0fe98b8d759f548b241f85e35379723a6d4f63bc

[18] https://www.semanticscholar.org/paper/9ca88f22c23b4fc4dc70c506e40c92c5f72d35e0

[19] https://www.semanticscholar.org/paper/47ab9ec90155c6d52eb54a1bb07152d5a6b81f0a

[20] https://pubmed.ncbi.nlm.nih.gov/34390366/

I went through these sources, and the results were disappointing. I have read most of the research studies on this topic and had specific sources I expected to see. Instead, the citations came from what I would consider low-value outlets, even though I know better content is available. These are not top-tier educational research journals.

- Schoen, I. (2012). Effects of Method and Context of Note-taking on Memory: Handwriting versus Typing in Lecture and Textbook-Reading Contexts. [Senior thesis]

- Emory J, Teal T, Holloway G. Electronic note taking technology and academic performance in nursing students. Contemp Nurse. 2021 Apr-Jun;57(3-4):235-244. doi: 10.1080/10376178.2021.1997148. Epub 2021 Nov 8. PMID: 34674607.

- Wiechmann W, Edwards R, Low C, Wray A, Boysen-Osborn M, Toohey S. No difference in factual or conceptual recall comprehension for tablet, laptop, and handwritten note-taking by medical students in the United States: a survey-based observational study. J Educ Eval Health Prof. 2022;19:8. doi: 10.3352/jeehp.2022.19.8. Epub 2022 Apr 26. PMID: 35468666; PMCID: PMC9247713.

- Crumb, R.M., Hildebrandt, R., & Sutton, T.M. (2020). The Value of Handwritten Notes: A Failure to Find State-Dependent Effects When Using a Laptop to Take Notes and Complete a Quiz. Teaching of Psychology, 49, 7 – 13.

- Mitchell, A., & Zheng, L. (2019). Examining Longhand vs. Laptop Debate: A Replication Study. AIS Trans. Replication Res., 5, 9.

SciSpace

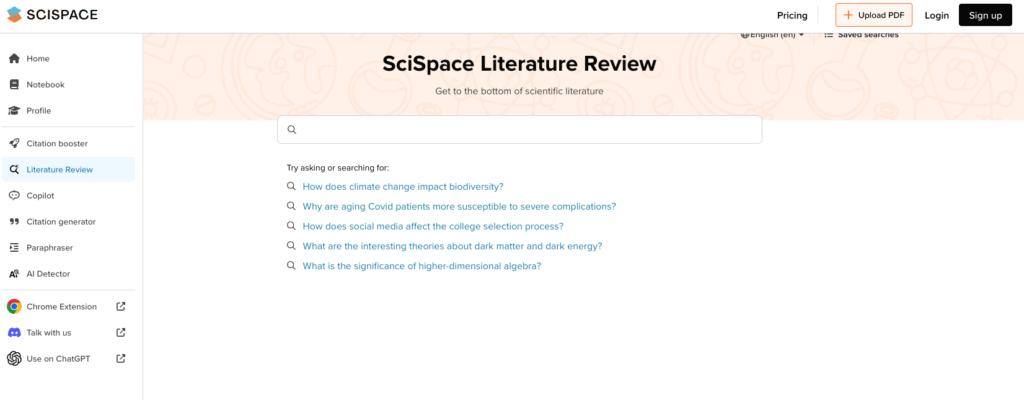

SciSpace was developed with a tighter focus on the research literature.

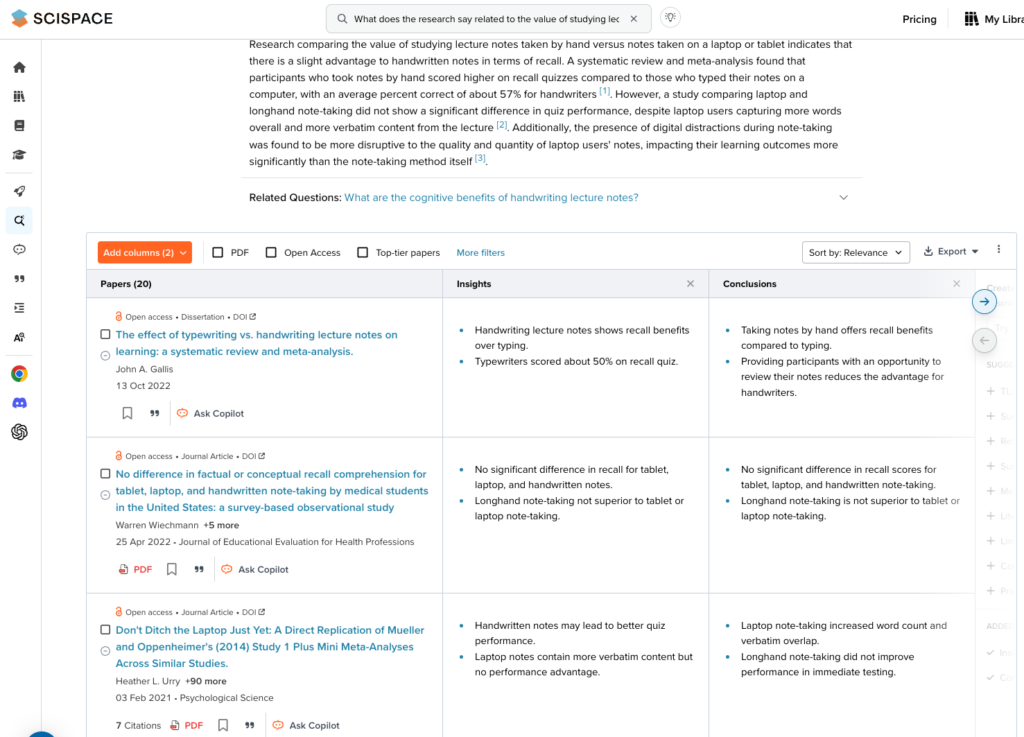

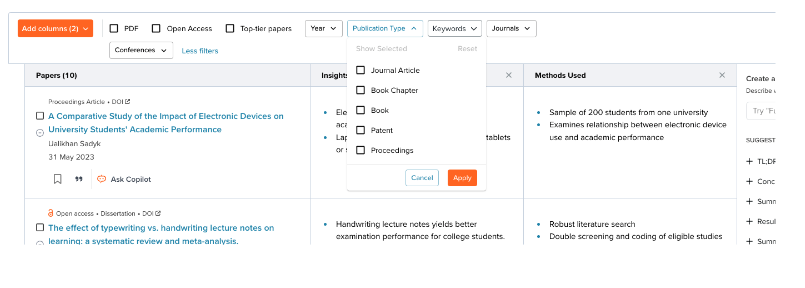

The output from the same prompt generated a summary and a list of 90 citations (see below). Each citation appears with characteristics selected from a list available to the user. These supplemental comments are useful in determining which citations I may wish to read in full. Various filters can be applied to the original collection to help narrow the output. Also included are ways to designate the recency of the publications to be displayed and to limit the output to journal articles.

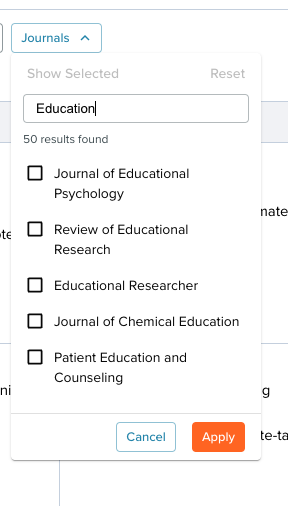

The journals that SciSpace accesses can be reviewed and I was pleased to see that what I consider the core educational research journals are covered.

Here is the finding I consider most important. SciSpace does provide many citations from open-access journals. These are great, but I was most interested in whether the output included the main sources I knew should be there. These citations were included.

Luo, L., Kiewra, K. A., Flanigan, A. E., & Peteranetz, M. S. (2018). Laptop versus longhand note taking: effects on lecture notes and achievement. Instructional Science, 46(6):947-971. doi: 10.1007/S11251-018-9458-0

Mueller, P., & Oppenheimer, D. M. (2014). The Pen Is Mightier Than the Keyboard: Advantages of Longhand Over Laptop Note Taking. Psychological Science, 25(6):1159-1168. doi: 10.1177/0956797614524581

Bui, D. C., Myerson, J., & Hale, S. (2013). Note-taking with computers: Exploring alternative strategies for improved recall. Journal of Educational Psychology, 105(2):299-309. doi: 10.1037/A0030367

Summary

This post summarizes my thoughts on which of multiple existing AI-enabled services I should retain to meet my personal search and writing interests. I found SciSpace superior to Perplexity when it came to identifying prompt-relevant journal articles. Again, I have attempted to be specific about what I use AI search to accomplish and your interests may differ.
