In a recent episode of the EdTech Situation Room, host Jason Neiffer briefly observed that educators could improve AI-generated multiple-choice questions by giving the AI tool a list of rules to apply when writing them. This made sense to me, and I think I understand the issue that led to the recommendation. I have written multiple times that students and educators can use AI services to generate questions of all types. In my own experience, too many of the generated questions used structures I did not like, and I found myself continually requesting rewrites to exclude question types I found annoying: for example, questions with an answer option such as “all of the above” or a question stem asking for the response that was “not correct”. Taking a preemptive approach made sense and set me exploring how this idea might be implemented. Neiffer proposed making use of an online source on how to write quality questions. I found it more effective to review such sources and then put together my own list of explicit rules.

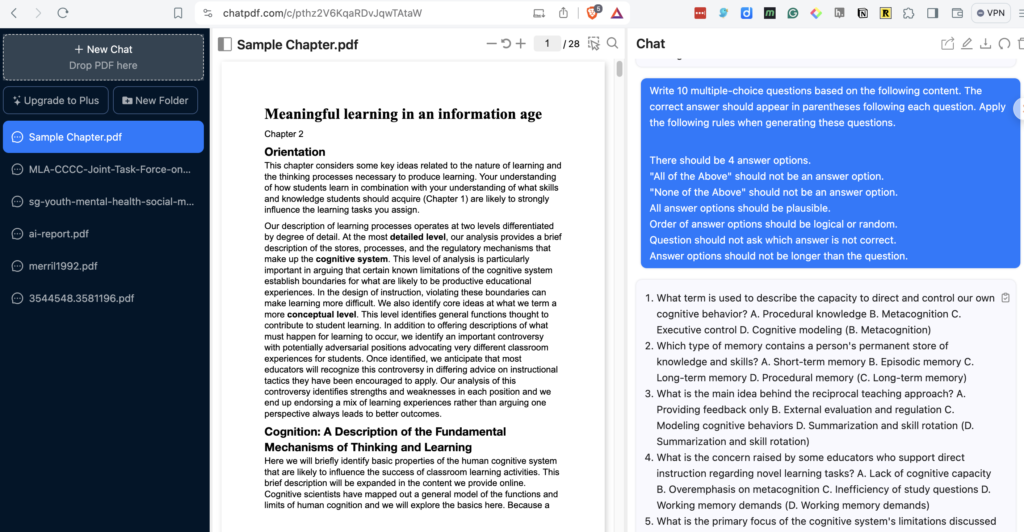

My approach used a prompt that looked something like this:

Write 10 multiple-choice questions based on the following content. The correct answer should appear in parentheses following each question. Apply the following rules when generating these questions.

There should be 4 answer options.

“All of the Above” should not be an answer option.

“None of the Above” should not be an answer option.

All answer options should be plausible.

Order of answer options should be logical or random.

Question should not ask which answer is not correct.

Answer options should not be longer than the question.

I would alter the first couple of sentences of this prompt depending on whether I was asking the AI service to use its own information base or wanted to include a content source that should be the focus of the questions. If I was asking for questions based on the large language model's own knowledge alone, I would include a comment about the level of the students who would be answering the questions (e.g., high school students). For example, without this addition, questions about mitosis and meiosis included concepts I did not think most high school sophomores would have covered. When I provided the AI service with the content to be covered, I did not use this addition.
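For anyone who wants to reuse the same rules across many requests, the prompt and its two variations can be assembled programmatically. The following is only a minimal sketch: the function name `build_prompt` and its parameters (`topic`, `audience`, `content`) are my own hypothetical choices and do not belong to any AI service. The wording of the topic-only opening sentence is also an assumption, since the prompt above shows only the content-based version.

```python
# Rules copied from the prompt above; edit this list to match your own preferences.
RULES = [
    "There should be 4 answer options.",
    '"All of the Above" should not be an answer option.',
    '"None of the Above" should not be an answer option.',
    "All answer options should be plausible.",
    "Order of answer options should be logical or random.",
    "Question should not ask which answer is not correct.",
    "Answer options should not be longer than the question.",
]


def build_prompt(rules, num_questions=10, content=None, topic=None, audience=None):
    """Assemble a question-writing prompt (hypothetical helper, not a service API).

    Pass `content` to base the questions on supplied text, or `topic`
    (optionally with `audience`) to rely on the model's own knowledge.
    """
    if content is None and topic is None:
        raise ValueError("Provide either content or a topic.")

    if content is not None:
        opening = (
            f"Write {num_questions} multiple-choice questions "
            "based on the following content."
        )
    else:
        # Assumed wording for the variation that uses no content source.
        opening = f"Write {num_questions} multiple-choice questions about {topic}."
        if audience is not None:
            opening += f" The questions should be appropriate for {audience}."

    header = " ".join(
        [
            opening,
            "The correct answer should appear in parentheses following each question.",
            "Apply the following rules when generating these questions.",
        ]
    )

    lines = [header, ""] + list(rules)
    if content is not None:
        # The source text goes last, after the instructions and rules.
        lines += ["", content]
    return "\n".join(lines)


topic_prompt = build_prompt(
    RULES, topic="mitosis and meiosis", audience="high school students"
)
content_prompt = build_prompt(RULES, content="Paste the chapter text here.")
```

The resulting string can be pasted into whichever service you use. Keeping the rules in a separate list makes it easy to add or remove a rule without rewriting the rest of the prompt.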

Questions based on a chapter

I have been evaluating the potential of an AI service to function as a tutor by interacting with a chapter of content. My wife and I have written a college textbook, so I have authentic content to work with. The chapter is close to 10,000 words in length. In this case, I loaded this content and the prompt into ChatPDF, NotebookLM and ChatGPT. I pay $20 a month for ChatGPT and use the free versions of the other two services. All three proved to be effective.

ChatPDF

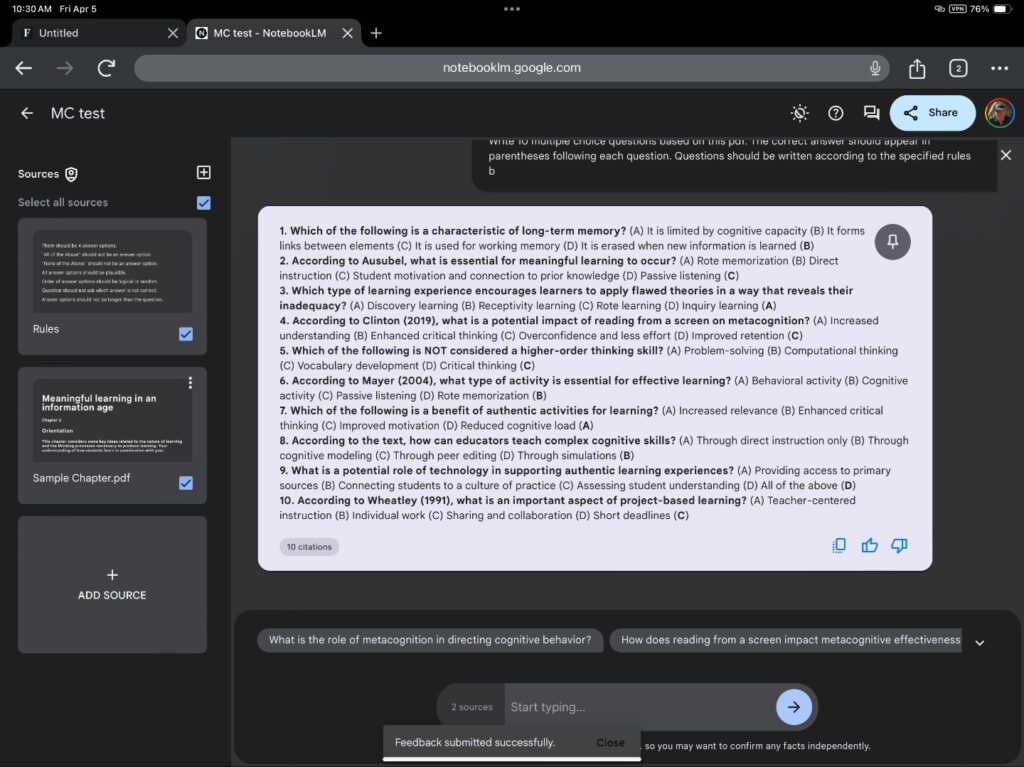

NotebookLM

With NotebookLM, you can upload multiple files that serve as the focus of the chat. For some reason, I had better results when I uploaded the rules I wanted the system to apply as a second source, as the service suggested, rather than including them as part of the prompt.

ChatGPT

The process works a little differently with ChatGPT. I first copied the text from the PDF and pasted this content into the prompt window. I then scrolled to the beginning of this content and added my prompt. I could then ask the service to produce additional samples by requesting another 10 or 20 questions. Asking for multiple samples produced some interesting outcomes. Even the format of the output sometimes changed (see the position of the answer in the following two examples).

**4. According to Clinton (2019), what is a potential impact of reading from a screen on metacognition?**

(A) Increased understanding

(B) Enhanced critical thinking

(C) Overconfidence and less effort

(D) Improved retention

(**C**)

**7. Which skill is considered a “higher order thinking skill”?**

(A) Word identification

(B) Critical thinking (**Correct**)

(C) Fact memorization

(D) Basic calculation
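If you plan to collect questions from several samples into a single pool, the shifting answer position means any script that reads the output has to accept both layouts. Here is a rough sketch of a parser that handles the two formats shown above. The function name `parse_question` and the returned dictionary keys are my own hypothetical choices, and the sketch assumes options are always labeled (A) through (D) and that the inline marker is always the word "Correct".

```python
import re

# "(C) Some option text" -> letter and text
OPTION_RE = re.compile(r"^\(([A-D])\)\s*(.+)$")
# "(C)" alone on a line -> the answer key
ANSWER_RE = re.compile(r"^\(([A-D])\)$")
INLINE_MARK = "(Correct)"


def parse_question(block):
    """Parse one question in either answer layout (hypothetical helper)."""
    # Drop markdown bold markers and blank lines before matching.
    lines = [ln.replace("*", "").strip() for ln in block.splitlines()]
    lines = [ln for ln in lines if ln]

    stem = lines[0]
    options = {}
    answer = None
    for ln in lines[1:]:
        key_only = ANSWER_RE.match(ln)
        if key_only:
            # Layout 1: the answer letter sits on its own line.
            answer = key_only.group(1)
            continue
        match = OPTION_RE.match(ln)
        if match:
            letter, text = match.group(1), match.group(2)
            if text.endswith(INLINE_MARK):
                # Layout 2: the correct option is flagged inline.
                text = text[: -len(INLINE_MARK)].strip()
                answer = letter
            options[letter] = text
    return {"stem": stem, "options": options, "answer": answer}


sample_separate = """**4. According to Clinton (2019), what is a potential impact of reading from a screen on metacognition?**
(A) Increased understanding
(B) Enhanced critical thinking
(C) Overconfidence and less effort
(D) Improved retention
(**C**)"""

sample_inline = """**7. Which skill is considered a "higher order thinking skill"?**
(A) Word identification
(B) Critical thinking (**Correct**)
(C) Fact memorization
(D) Basic calculation"""

parsed_separate = parse_question(sample_separate)
parsed_inline = parse_question(sample_inline)
```

A service could easily produce a third layout this sketch does not anticipate, so it is worth checking that every parsed question ends up with an answer before adding it to a pool.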

From sample to sample, some of the rules I asked ChatGPT to use were ignored, as in the following question. This slippage seemed less likely in the initial response to the prompt.

**What is an important consideration when designing project-based learning activities?**

(A) The amount of time available to students

(B) The availability of resources

(C) The level of student autonomy

(D) All of the above

(**D**)
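Because rules can be ignored in later samples, it may help to screen a large pool automatically before reviewing it by hand. The sketch below checks only the rules that can be tested mechanically. The function name `check_rules` is a hypothetical choice of mine, and the test for negatively worded stems is a crude heuristic (it looks for the words "not" or "except"), so it can flag acceptable questions. Whether options are plausible or logically ordered still requires human judgment.

```python
import re


def check_rules(stem, options):
    """Return a list of rule violations for one question (hypothetical helper)."""
    problems = []

    if len(options) != 4:
        problems.append("does not have exactly 4 answer options")

    lowered = [opt.strip().lower() for opt in options]
    if "all of the above" in lowered:
        problems.append('"All of the Above" is an answer option')
    if "none of the above" in lowered:
        problems.append('"None of the Above" is an answer option')

    # Crude heuristic for stems that ask which answer is NOT correct.
    stem_words = re.findall(r"[a-z]+", stem.lower())
    if "not" in stem_words or "except" in stem_words:
        problems.append("stem may ask for an incorrect or excluded answer")

    if any(len(opt) > len(stem) for opt in options):
        problems.append("an answer option is longer than the question")

    return problems


violations = check_rules(
    "What is an important consideration when designing "
    "project-based learning activities?",
    [
        "The amount of time available to students",
        "The availability of resources",
        "The level of student autonomy",
        "All of the above",
    ],
)
```

For the question above, `violations` contains a single entry reporting the "All of the Above" option. Flagged questions can then be dropped or sent back to the AI service for a rewrite.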

Summary

The quality of multiple-choice questions generated using AI tools can be improved by including rules for the AI service to follow in the prompt. I would recommend that educators who want to use the approach I describe here generate their own list of rules based on their preferences. The questions used on an examination should always be selected for appropriateness, but the AI-based approach is a great way to easily generate a large number of questions to serve as a pool from which an examination can be assembled. Multiple-choice exams should include a range of question types, and it may be more efficient for educators to write application questions themselves, because an educator is in the best position to understand the background of students and determine what extension beyond the content in the source material would be appropriate.
